Sharing the story of updating a HoloLens app made with HoloToolkit (HTK) to use the new Mixed Reality Toolkit v2 (MRTK), which supports HoloLens 2’s articulated hand tracking and eye tracking input.

This article is based on MRTK 2.0.0 and explains how to bring your MRTK v1 (HoloToolkit)-based projects to MRTK v2. To learn more about the latest MRTK releases, please visit the MRTK documentation: http://aka.ms/mrtkdocs

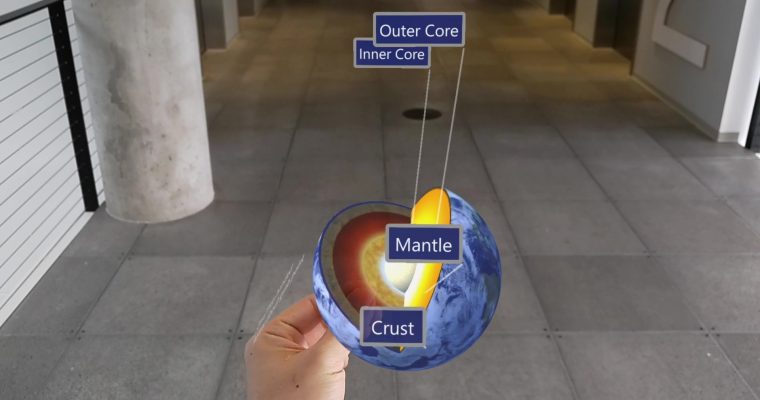

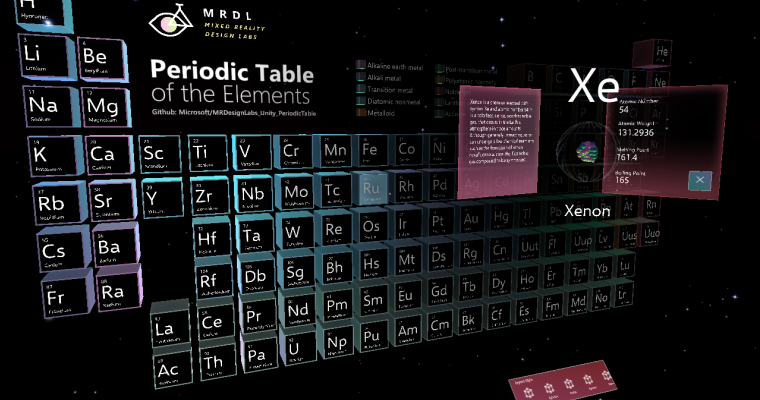

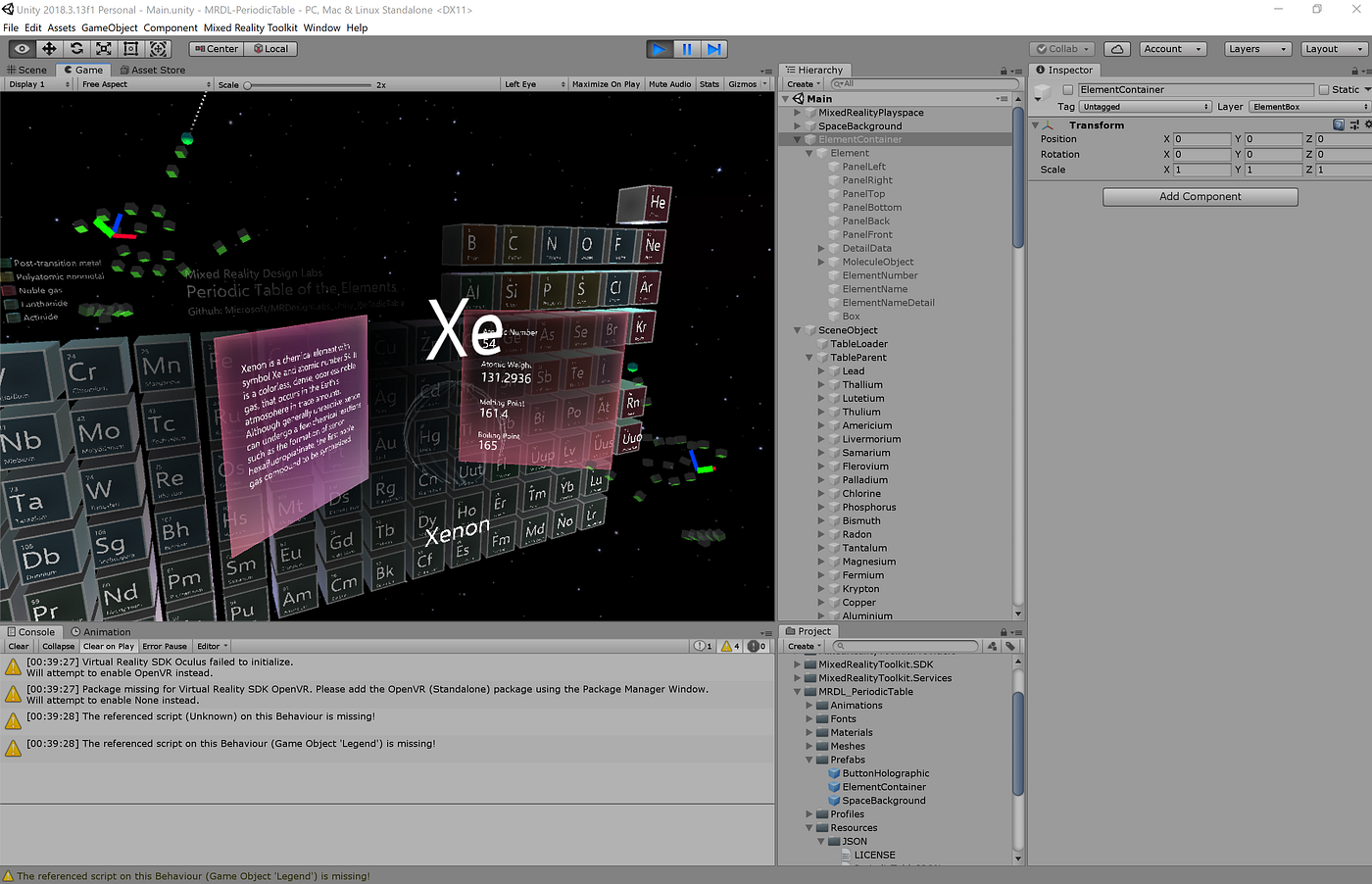

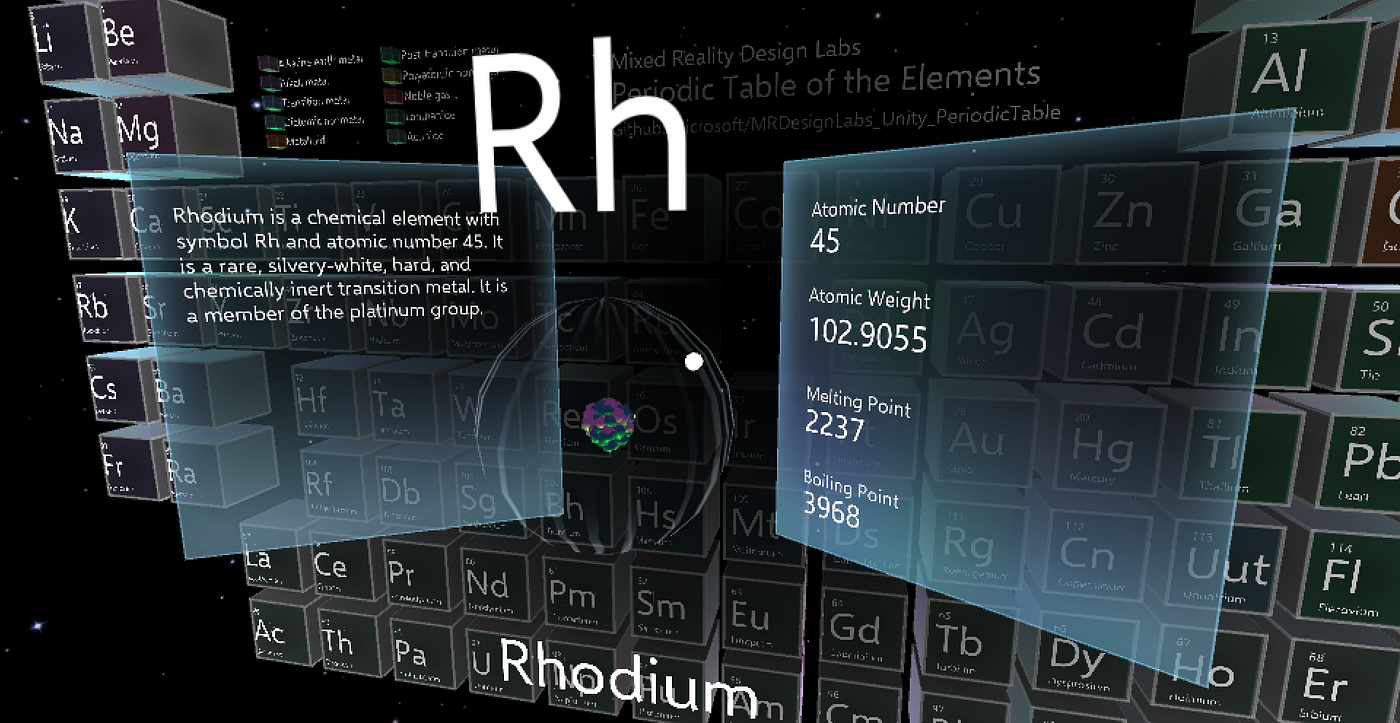

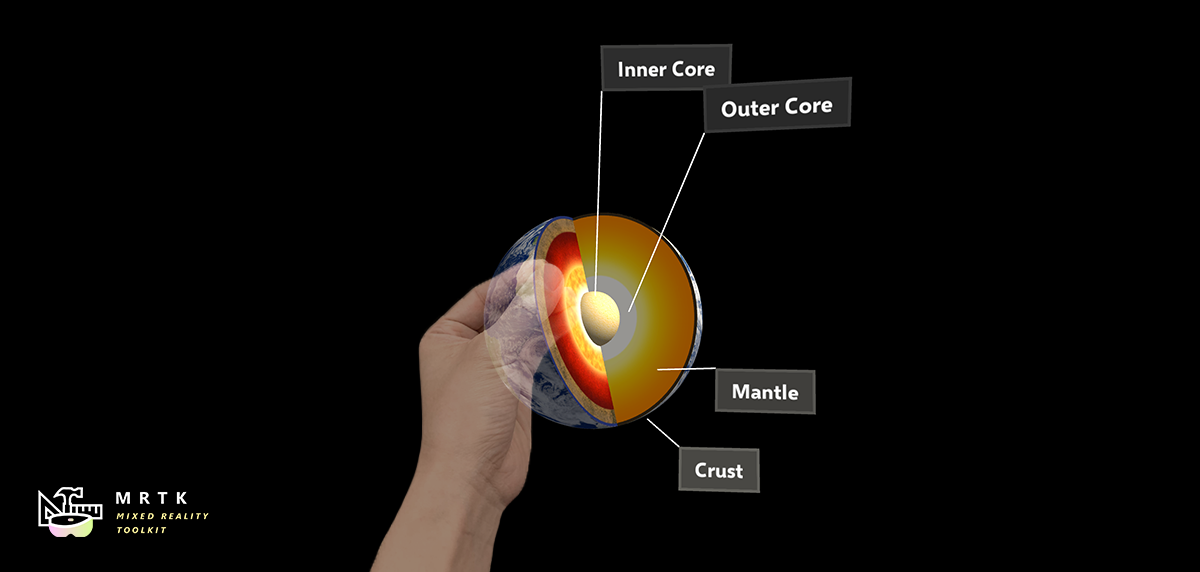

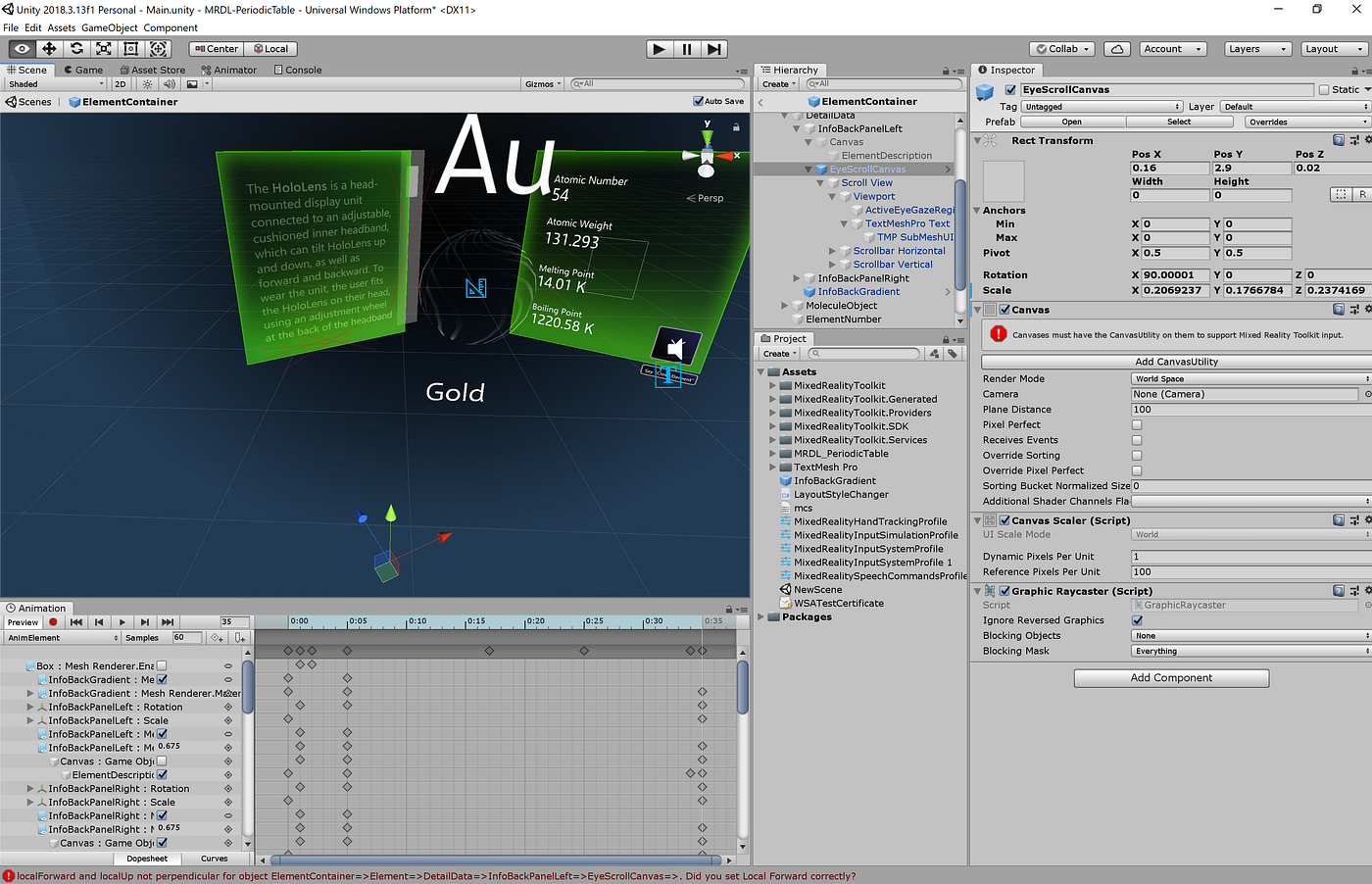

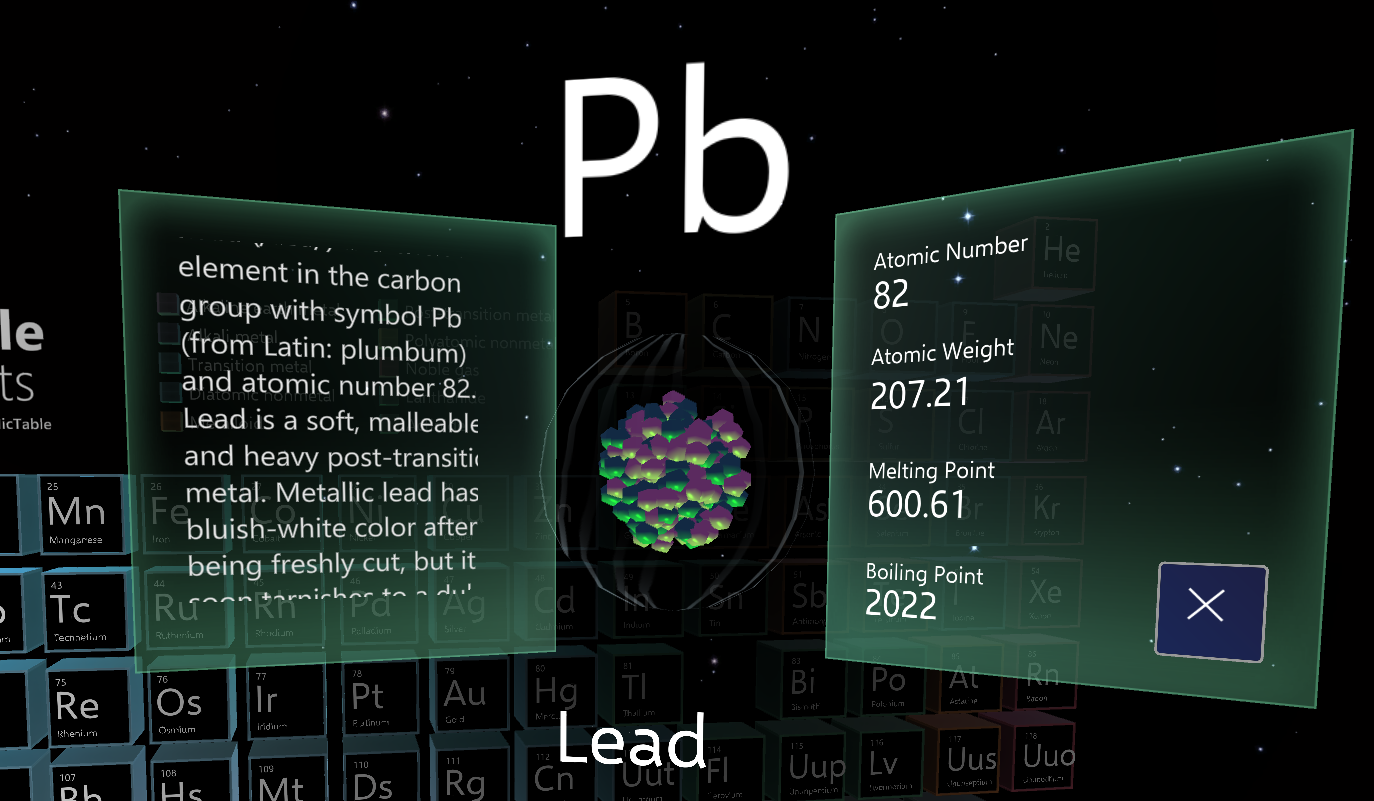

We published Mixed Reality Design Lab’s Periodic Table of the Elements app for HoloLens and immersive headsets back in 2015 to demonstrate how to create an end-to-end experience using HoloToolkit’s building blocks. To fully leverage the new articulated hand tracking and eye tracking input on HoloLens 2, we need to update the app from HoloToolkit (HTK) to the newly released Mixed Reality Toolkit (MRTK) v2.

What is MRTK v2?

MRTK is a Microsoft-driven open source project. MRTK-Unity provides a set of foundational components and features to accelerate mixed reality app development in Unity. The latest release of MRTK v2 supports HoloLens/HoloLens 2, Windows Mixed Reality, and OpenVR platforms.

Since MRTK v2 was completely redesigned from the ground up, foundational components such as the input system are not compatible with HoloToolkit. Because of this, upgrading from HoloToolkit to MRTK takes some effort. In this story, I’ll show you how I updated the app from HoloToolkit to MRTK and evolved the design to fully leverage hand tracking and eye tracking input.

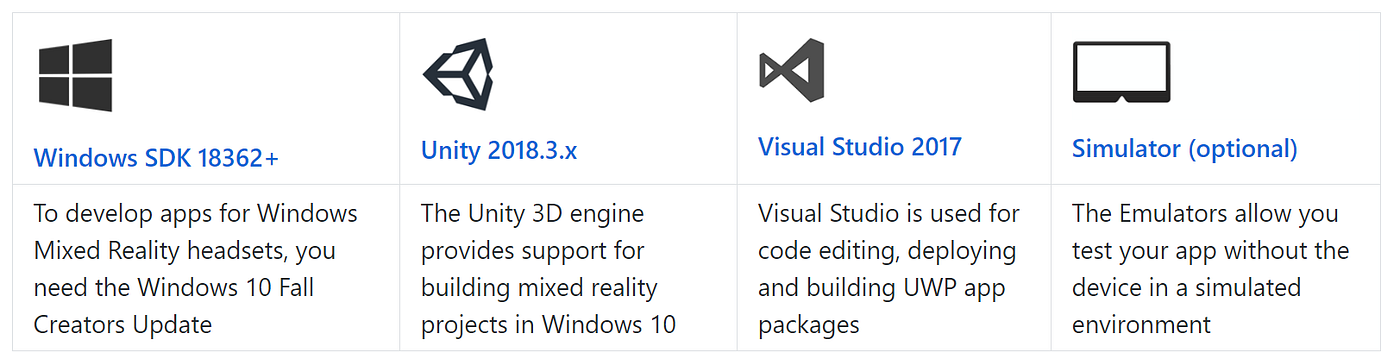

Required tools

You can find the latest information about the tools needed for HoloLens 2 development on this page:

https://docs.microsoft.com/en-us/windows/mixed-reality/install-the-tools

Getting new MRTK release packages

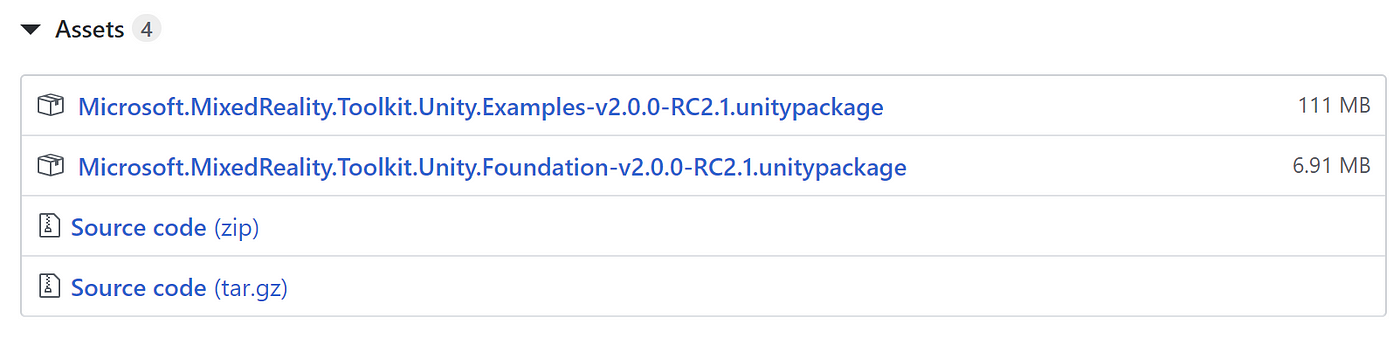

From the MRTK GitHub repository, you can download the MRTK release packages under the ‘Release’ menu. You can also clone the repository. The branch name for MRTK v2 is mrtk_release. If you want the latest features and components under active development, you can use the mrtk_development branch. However, please keep in mind that the development branch can be unstable and some things might be broken.

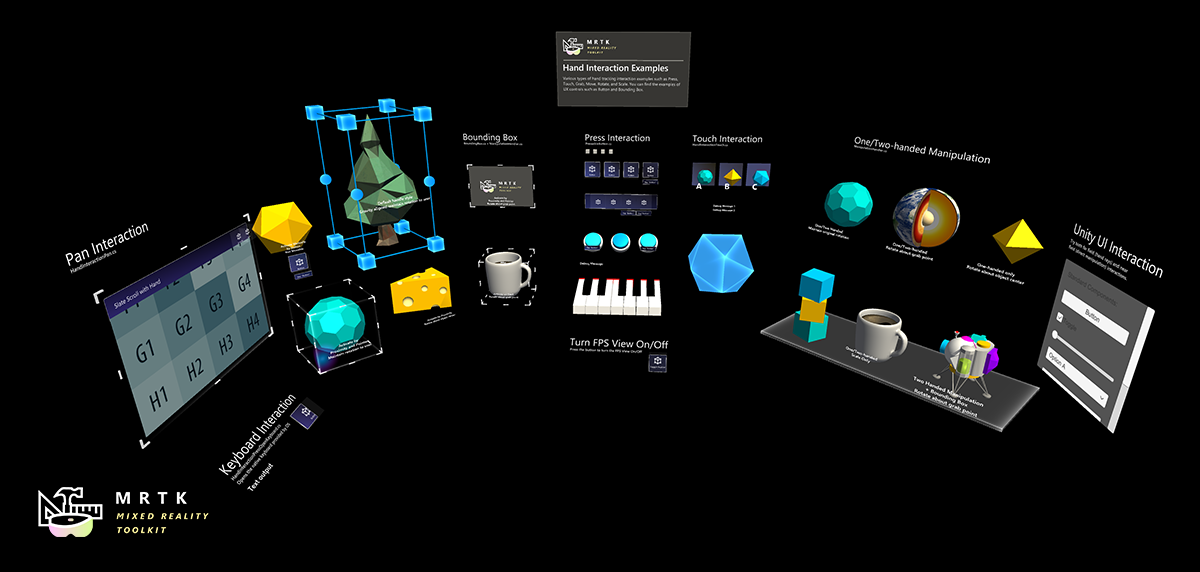

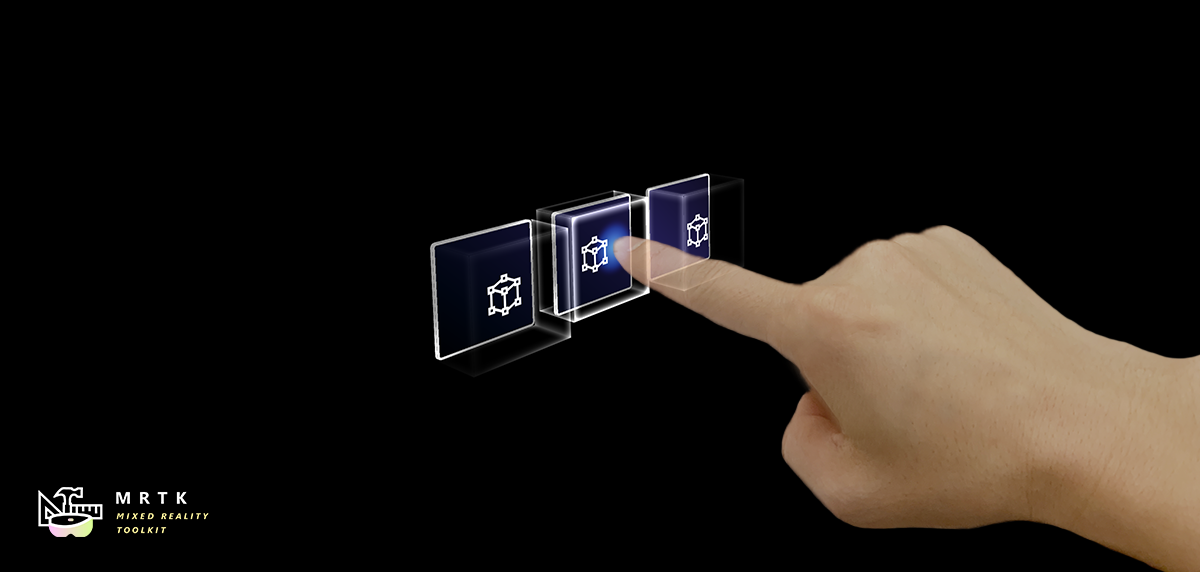

There are two types of release packages: Foundation and Examples. The Foundation package contains the core features and input system of MRTK. The optional Examples package contains example scenes and scripts that demonstrate the use of the core features in the Foundation package. In the example scenes, you can try out various new interaction building blocks and UI components. Below is one of the example scenes you can find in the Examples package.

The HandInteractionExamples.unity scene demonstrates various types of spatial UI and interactions for hand tracking input.

Replacing the HoloToolkit with MRTK

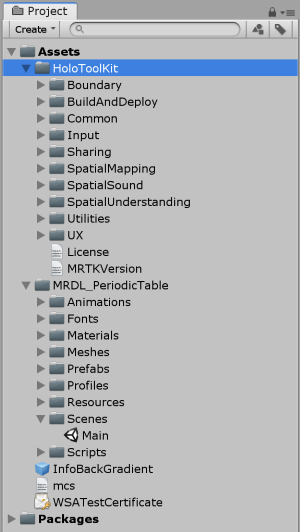

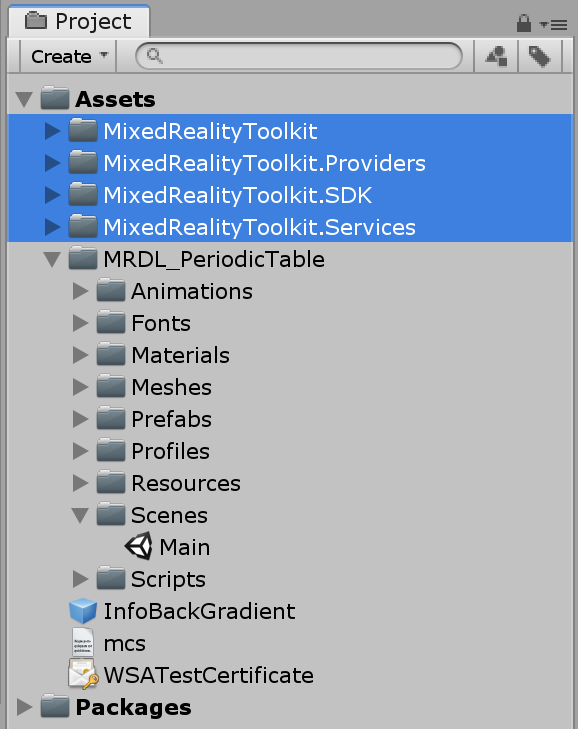

The original Periodic Table app project had a HoloToolkit folder alongside an MRDL_PeriodicTable folder. Since the app-specific scripts and content are in the MRDL_PeriodicTable folder, we can simply delete the HoloToolkit folder.

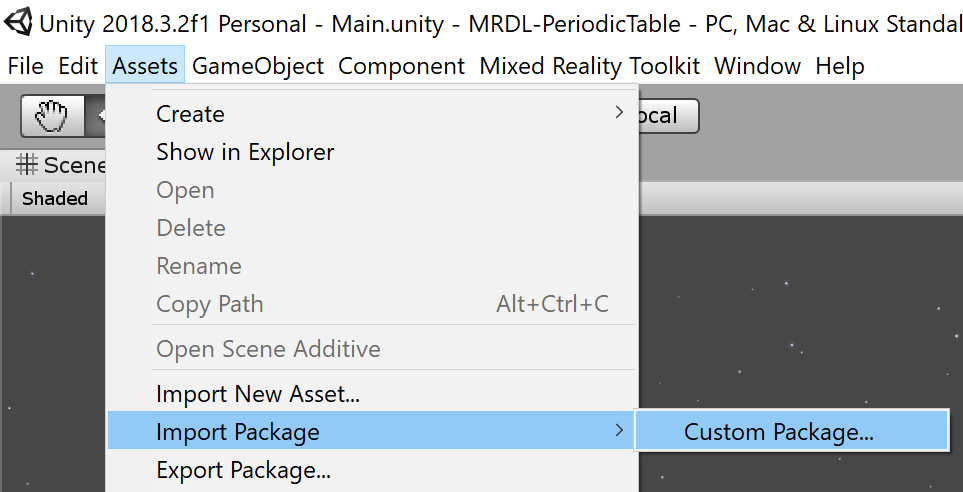

After deleting the HoloToolkit folder, let’s import the new MRTK packages through the Assets > Import Package > Custom Package… menu.

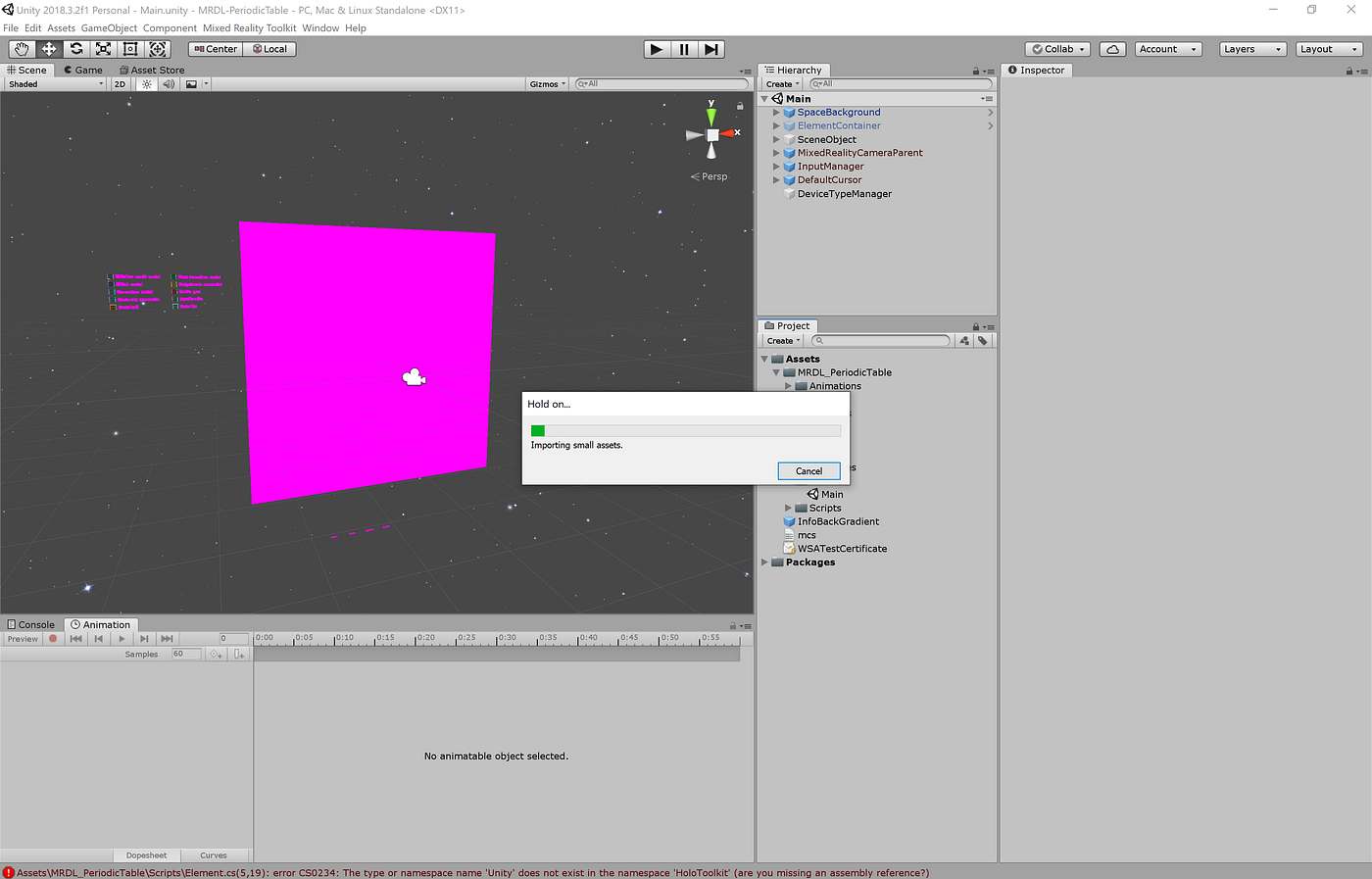

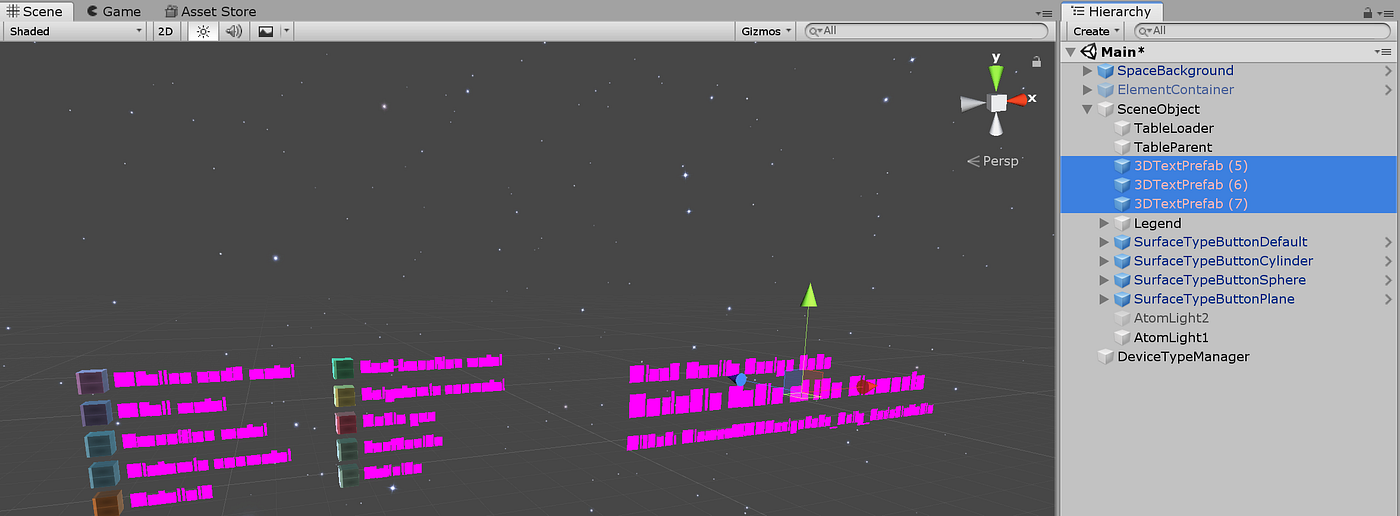

When you import the new MRTK, a lot of things will look broken because the locations of the shaders, materials, and fonts have changed. You will also see many error messages from the scripts.

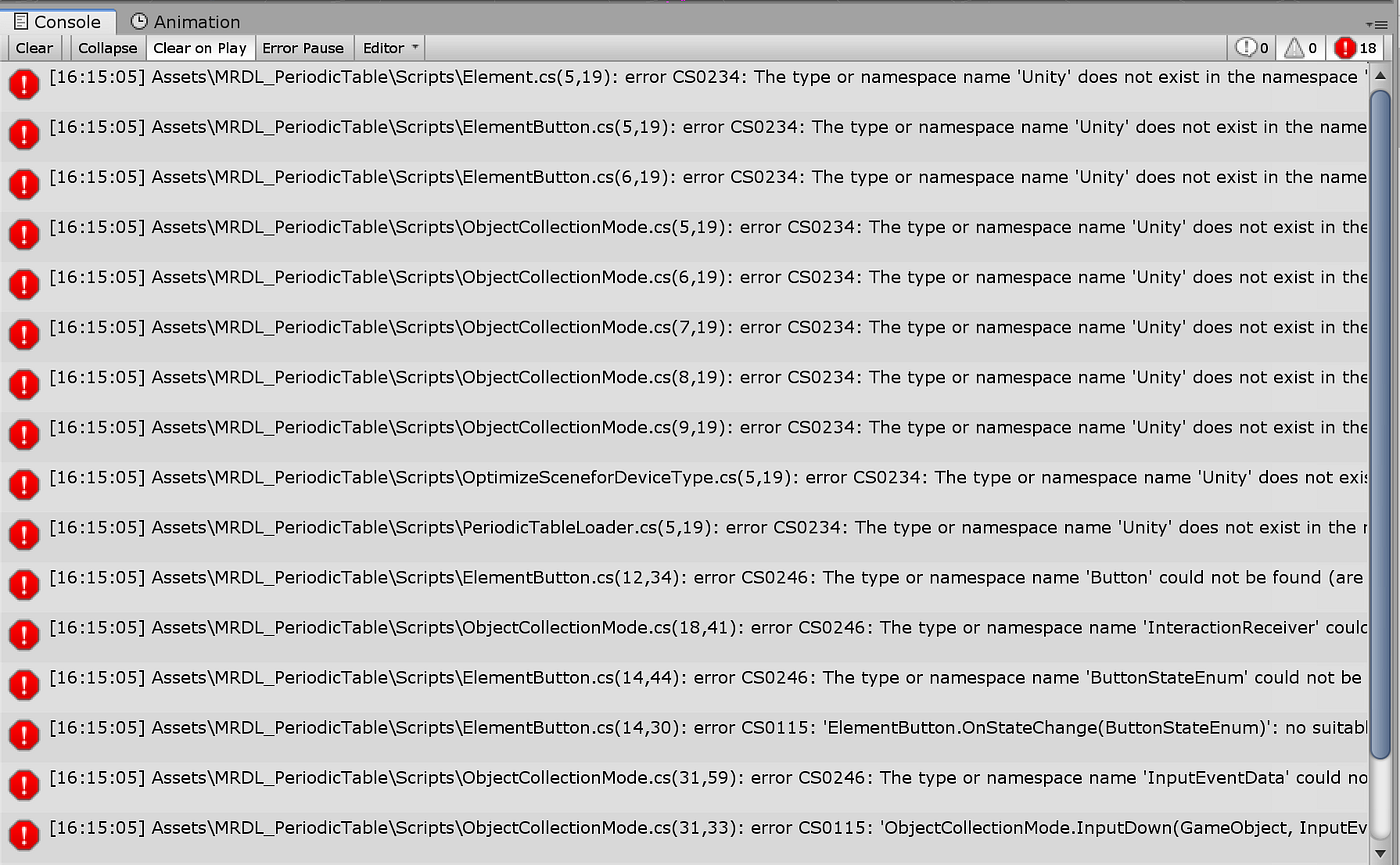

Resolving Script Errors

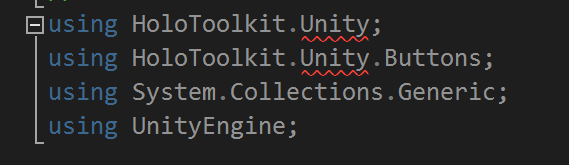

Namespace issues

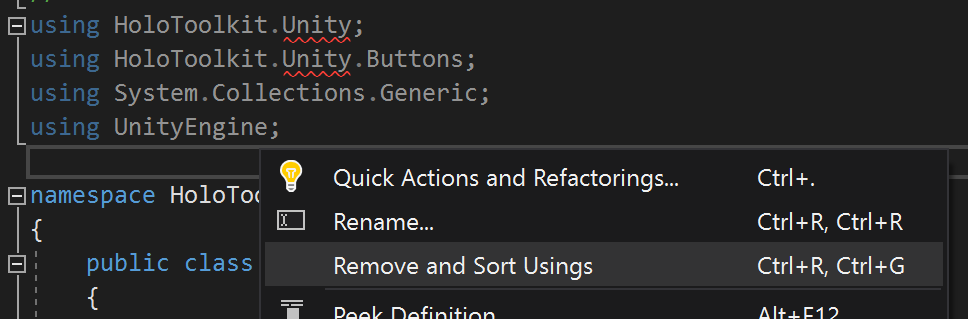

When you look at the script errors, you will find that most of them are caused by namespace changes. Old HoloToolkit.Unity namespace lines can be easily cleaned up with Visual Studio’s ‘Remove and Sort Usings’ menu.

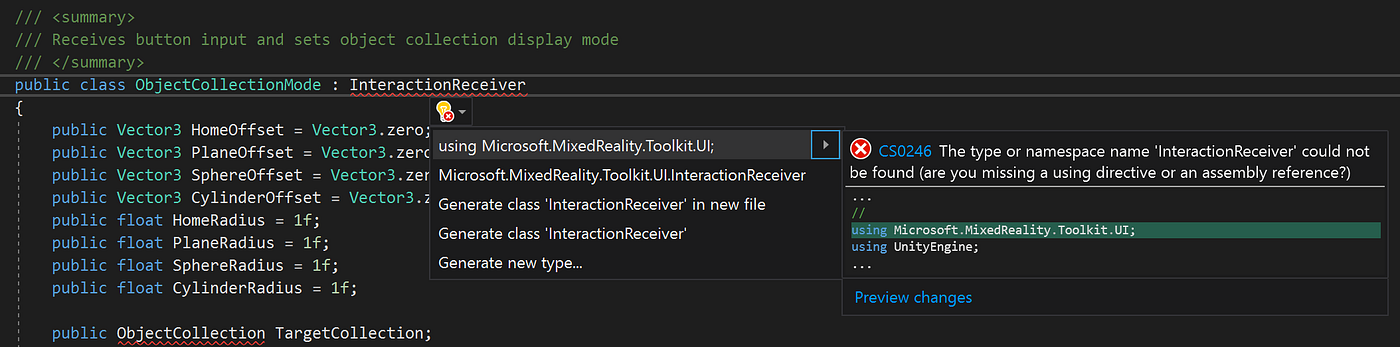

For missing classes and interfaces, you can use the ‘Show potential fixes’ menu to add or update the proper reference. The example below shows adding Microsoft.MixedReality.Toolkit.UI for the InteractionReceiver.

You can find a detailed HoloToolkit to MixedRealityToolkit API/component mapping table on this page: Porting Guide: Upgrading from the HoloToolkit (HTK)

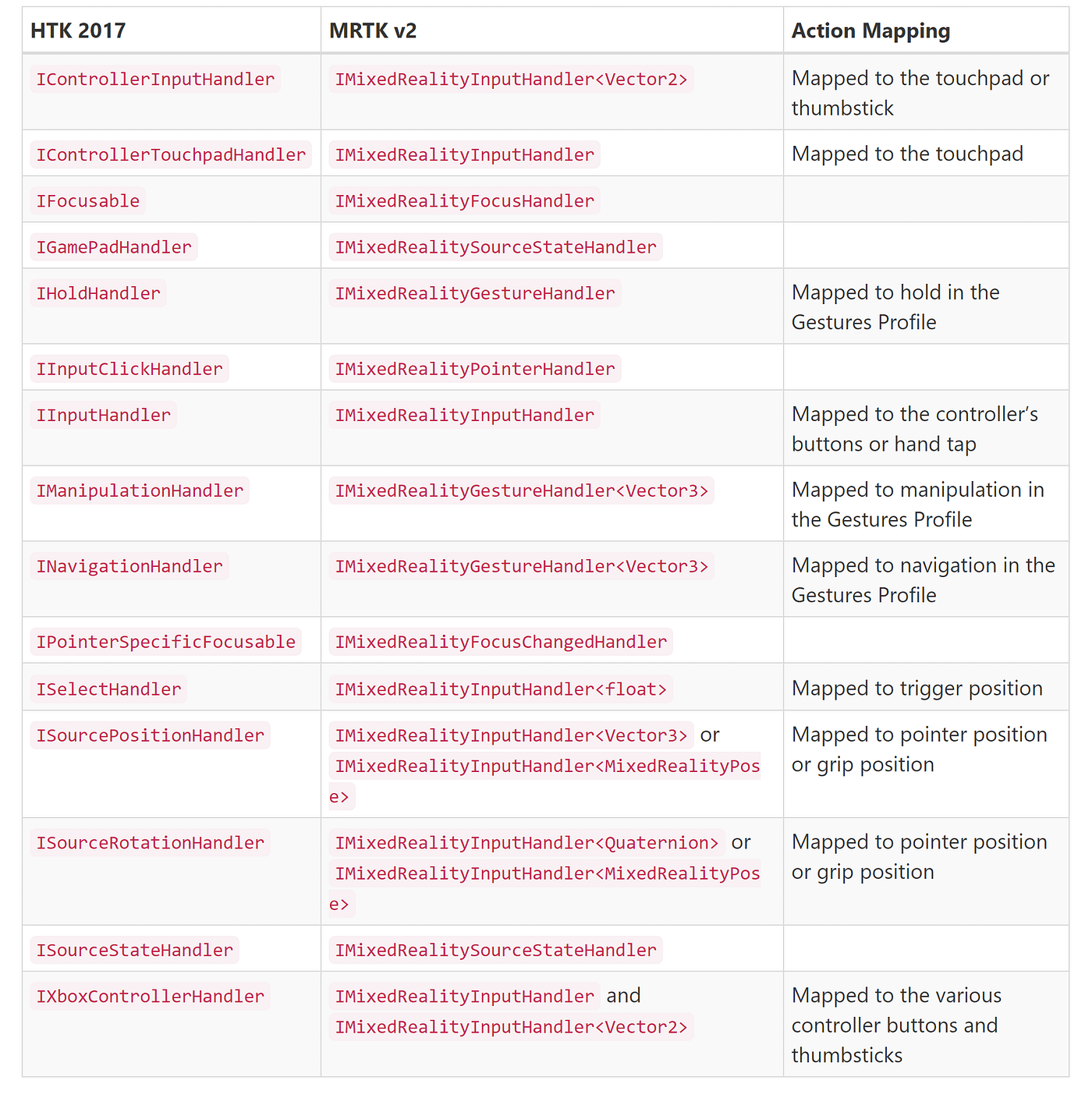

HTK-MRTK Mapping Table: Interface and event
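To make the namespace change concrete, here is a minimal sketch of what the top of a migrated script might look like. The commented-out lines are typical HoloToolkit usings, and the active lines are the MRTK v2 namespaces an app script commonly needs. The class name NamespaceMigrationExample is hypothetical and exists only so the file compiles; your own scripts may need a different set of namespaces.

```csharp
// Before (HoloToolkit): typical using directives in an app script.
// using HoloToolkit.Unity;
// using HoloToolkit.Unity.InputModule;
// using HoloToolkit.Unity.Collections;
// using HoloToolkit.Unity.Receivers;

// After (MRTK v2): namespaces that commonly replace them.
using Microsoft.MixedReality.Toolkit.Input;      // input events and handler interfaces
using Microsoft.MixedReality.Toolkit.UI;         // Interactable, InteractionReceiver
using Microsoft.MixedReality.Toolkit.Utilities;  // GridObjectCollection
using UnityEngine;

// Hypothetical placeholder class, only here so the file compiles.
public class NamespaceMigrationExample : MonoBehaviour
{
    // A field typed against an MRTK v2 class resolves once the usings above are in place.
    [SerializeField]
    private GridObjectCollection collection = null;
}
```

Usings that a given script does not end up needing can be removed again with ‘Remove and Sort Usings’.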

Renamed/Replaced/Deleted Class and Feature issues

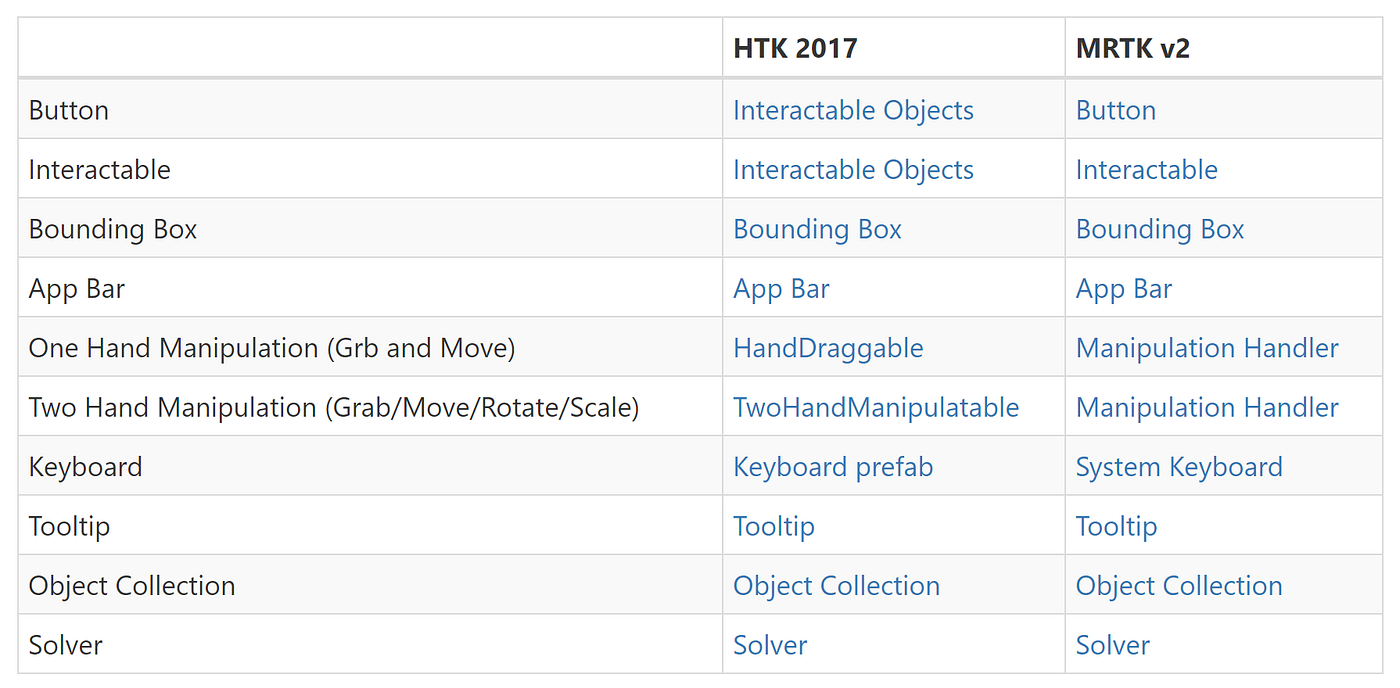

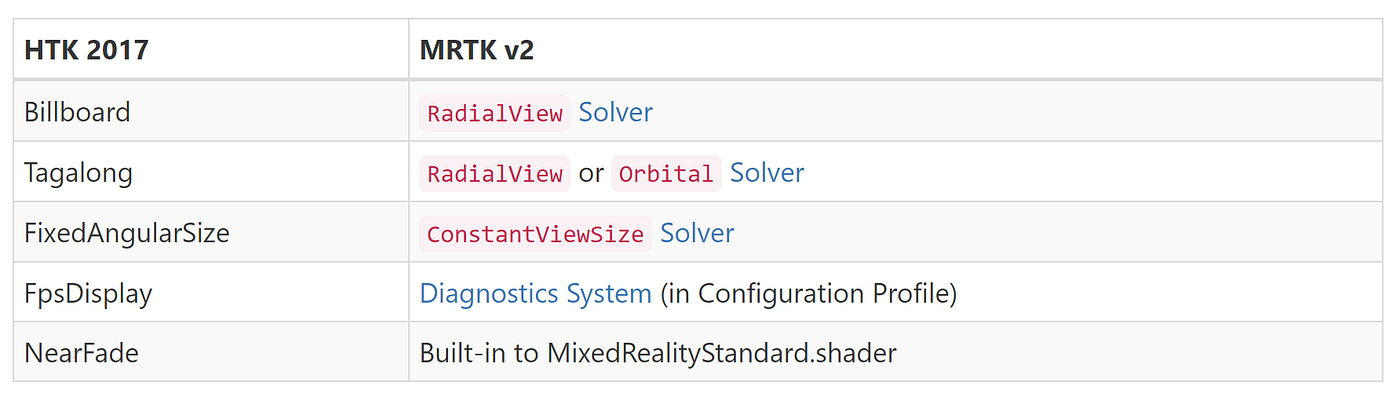

Most of the core features and building blocks have been either ported or upgraded for MRTK v2. You can find the mapping tables for the UX controls and utilities on the Porting Guide page.

HTK-MRTK Mapping Table: UX controls

HTK-MRTK Mapping Table: Utilities

Resolving Prefab/Material/Shader errors

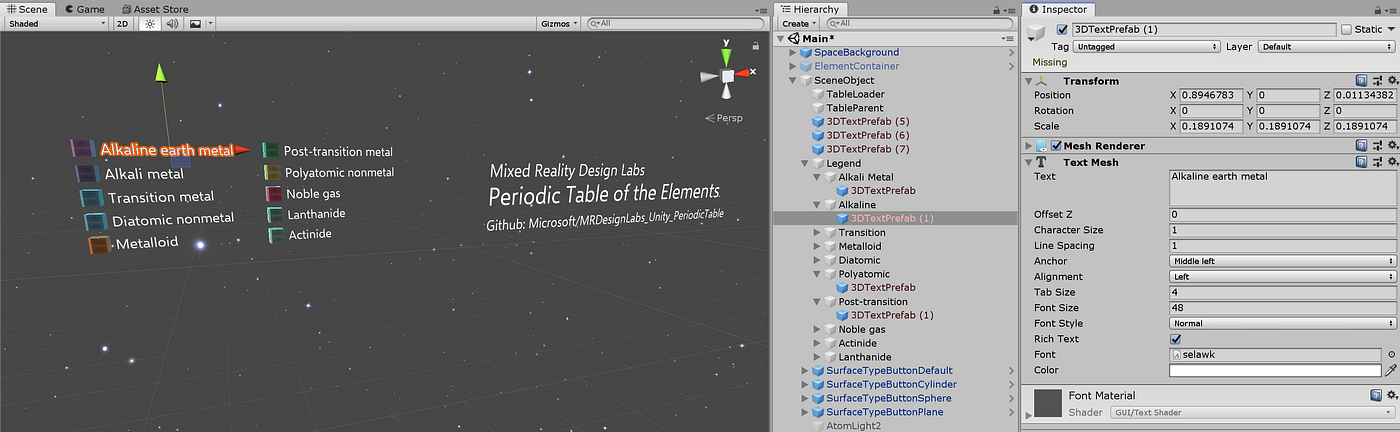

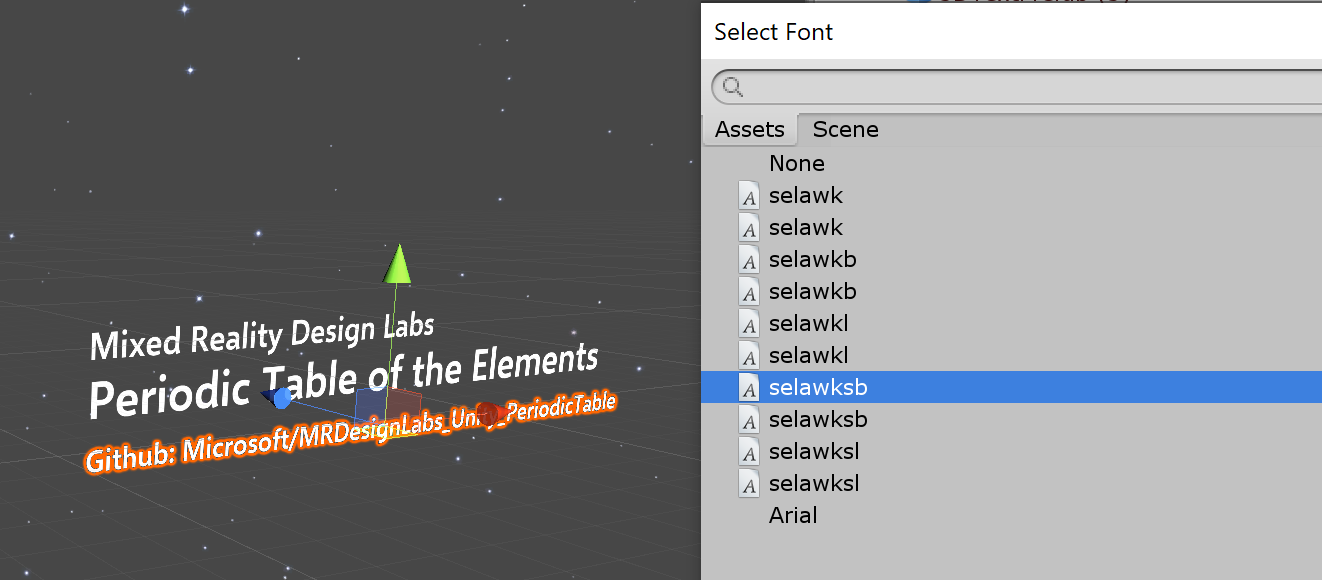

The first things we can easily clean up are the material/shader errors. Because of the location change, you need to re-assign the font textures and materials. The Periodic Table app uses the Selawik font series, which is one of Microsoft’s open-source fonts. The new locations of the font files and textures in MRTK v2 are:

MixedRealityToolkit/StandardAssets/Fonts

MixedRealityToolkit/StandardAssets/Materials

The materials in this folder for Selawik font support proper occlusion.

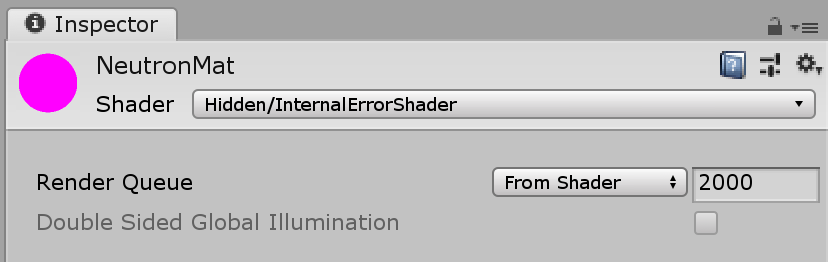

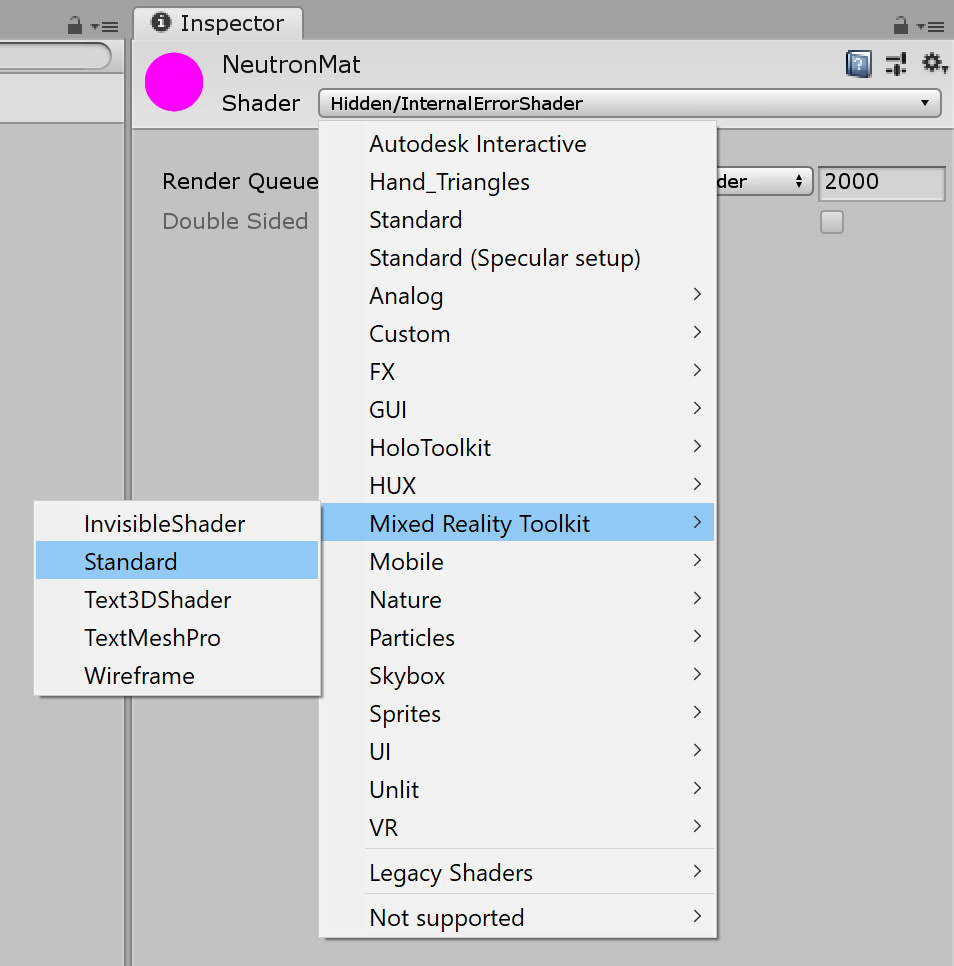

You will also find that some materials that used the old HoloToolkit shaders are broken and show Hidden/InternalErrorShader. You can simply re-assign Mixed Reality Toolkit > Standard from the Shader drop-down menu.

Building blocks used in the Periodic Table app

Foundational Scene Components: Camera, InputManager, Cursor

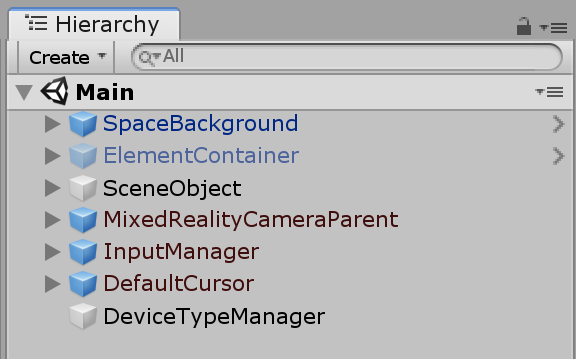

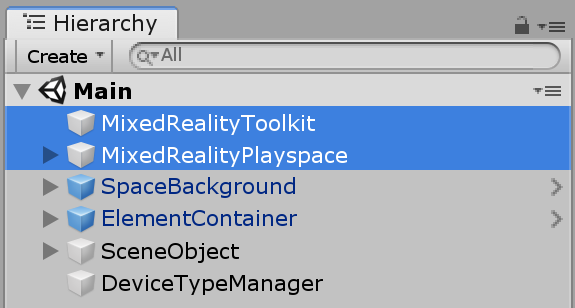

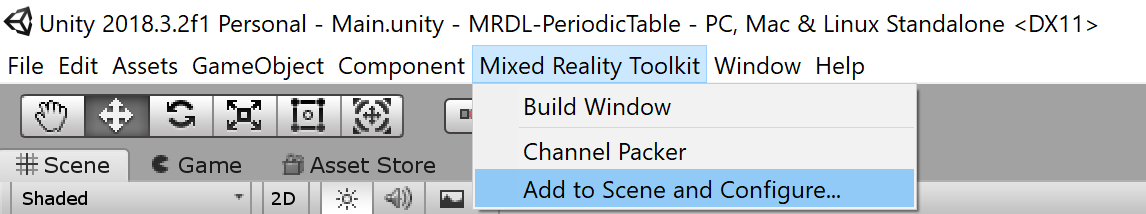

In the Scene Hierarchy, HoloToolkit had three basic components: MixedRealityCamera, InputManager, and Cursor. In MRTK, they are replaced with MixedRealityToolkit and MixedRealityPlayspace.

To upgrade these basic scene components, simply delete the old ones and use the menu Mixed Reality Toolkit > Add to Scene and Configure…

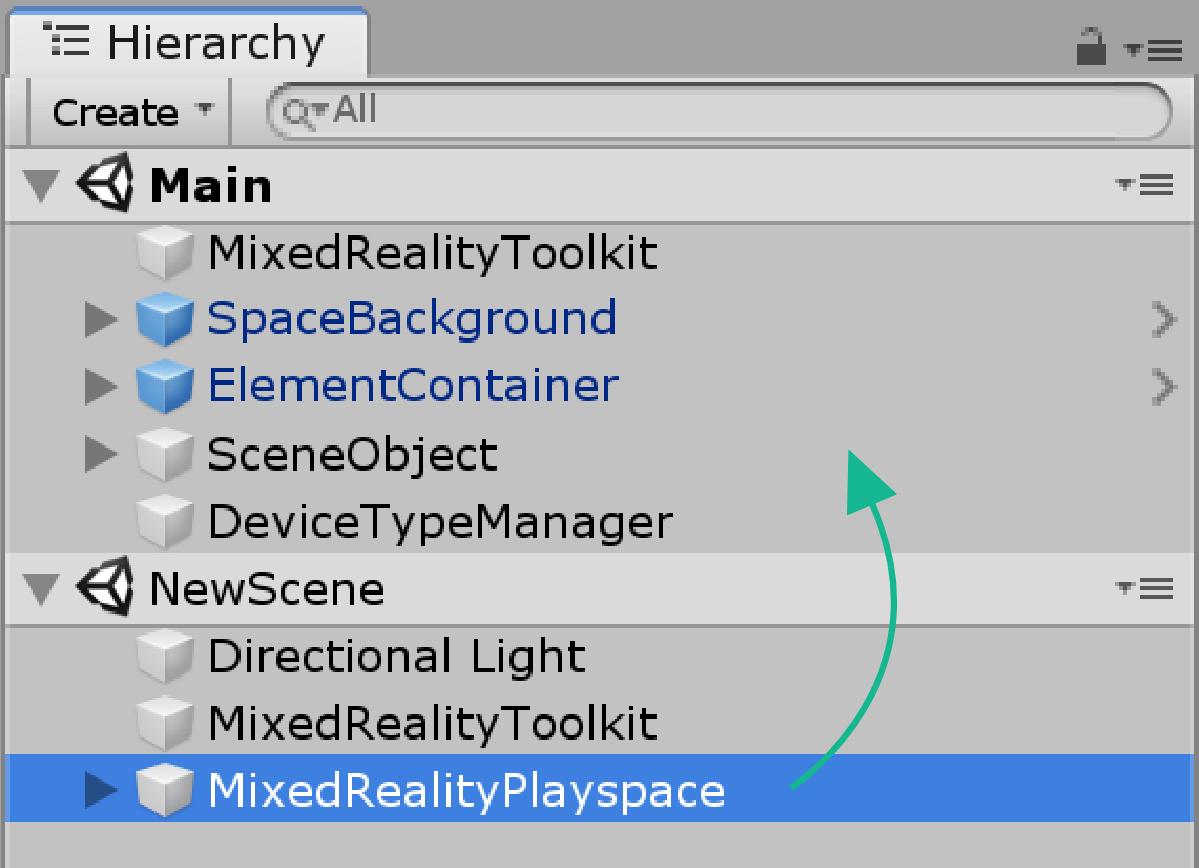

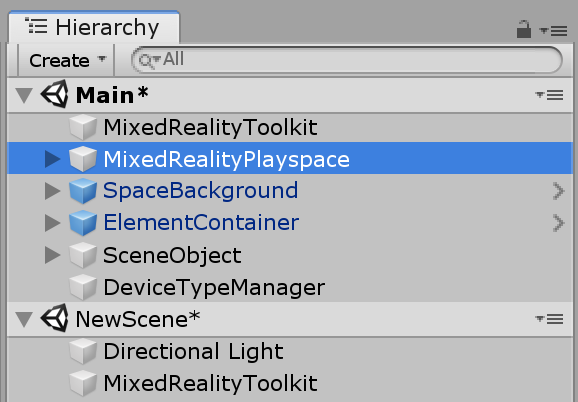

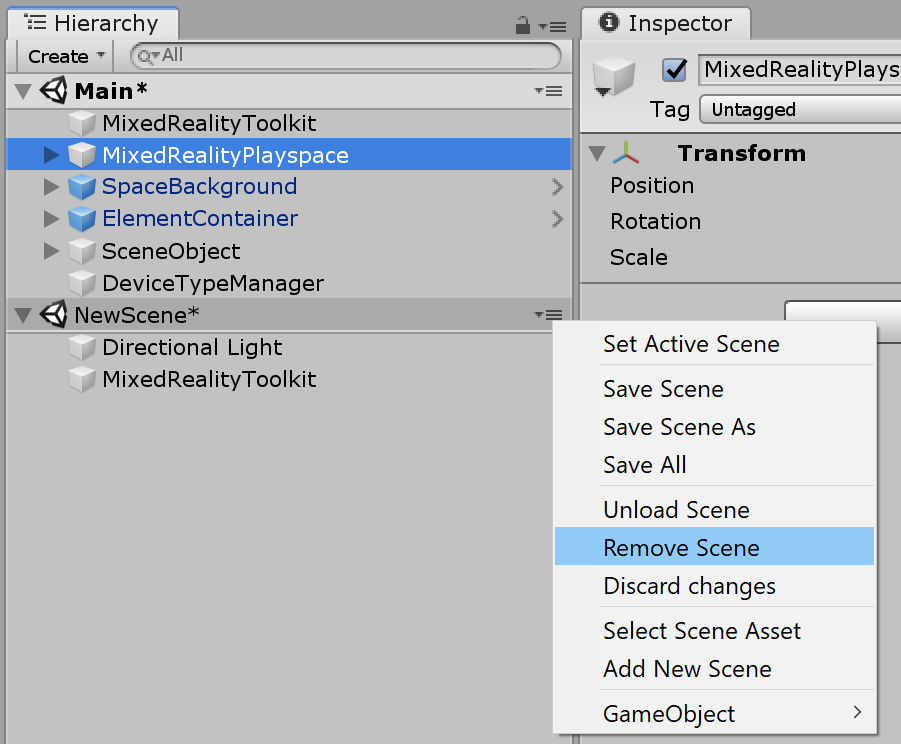

Sometimes, MixedRealityPlayspace might not be added through this menu. In this case, you can create a new scene, add the components using the menu, then drag and drop them into your existing scene. (You can drag and drop other scene files into the open scene.)

Running the app in Unity Editor with input simulation

MRTK v2 supports in-editor input simulation. Using the keyboard and mouse, you can test your app’s hand tracking and eye tracking input. The space bar gives you the right hand and the left Shift key gives you the left hand. The T and Y keys make them persistent. By dragging with the right mouse button, you can control the camera. Using the W, A, S, and D keys, you can move.

See MRTK’s Input Simulation Service documentation for more details.

Button

The Compound Button script series was used in the element and surface type buttons to provide visual feedback for different interaction states and to trigger actions. In MRTK v2, Compound Button has been replaced with Interactable. With Interactable, you can easily add visual feedback for different input states such as default, focus, and pressed. You can also use input events to trigger actions.

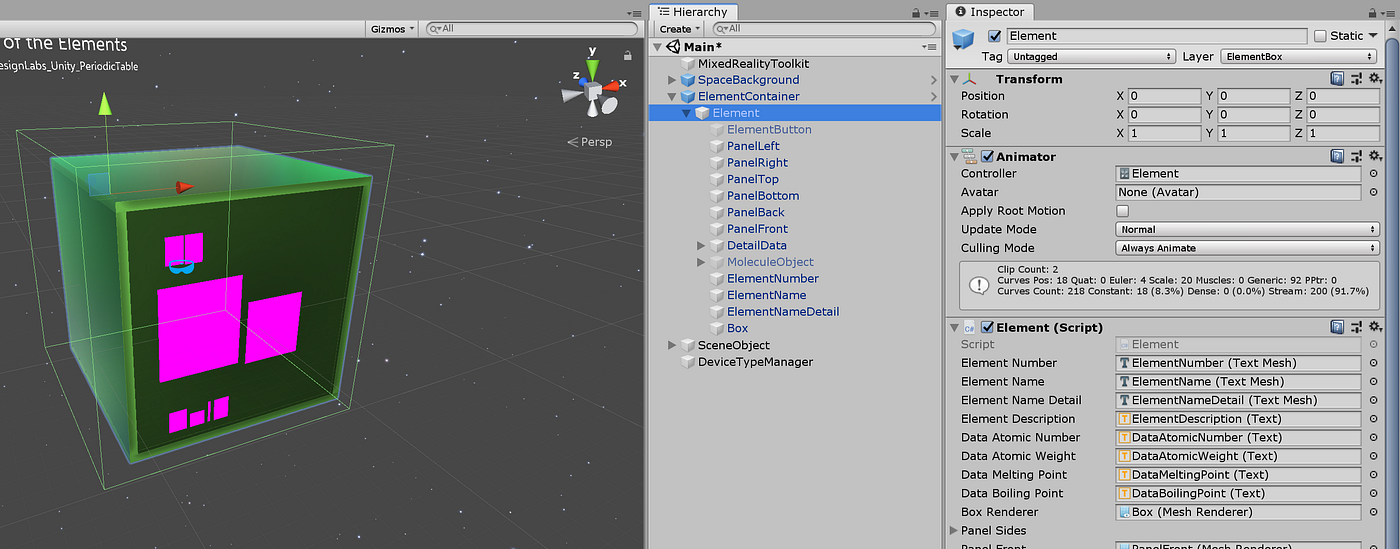

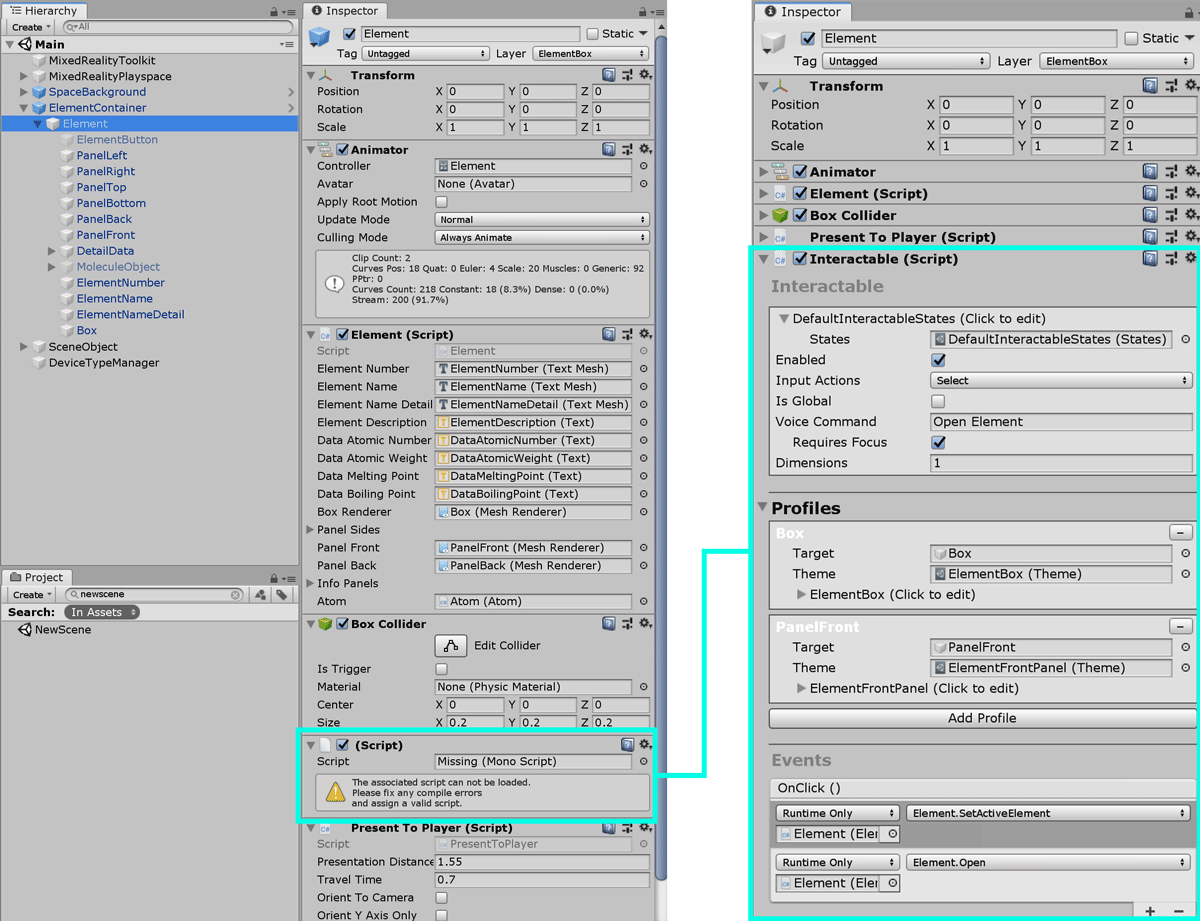

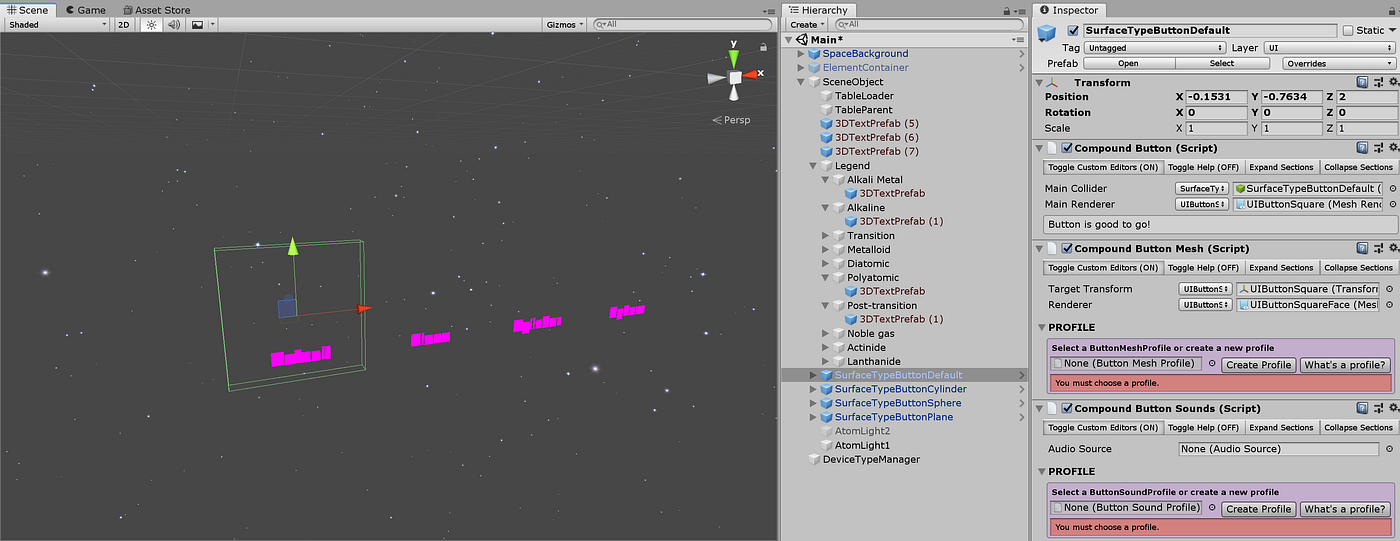

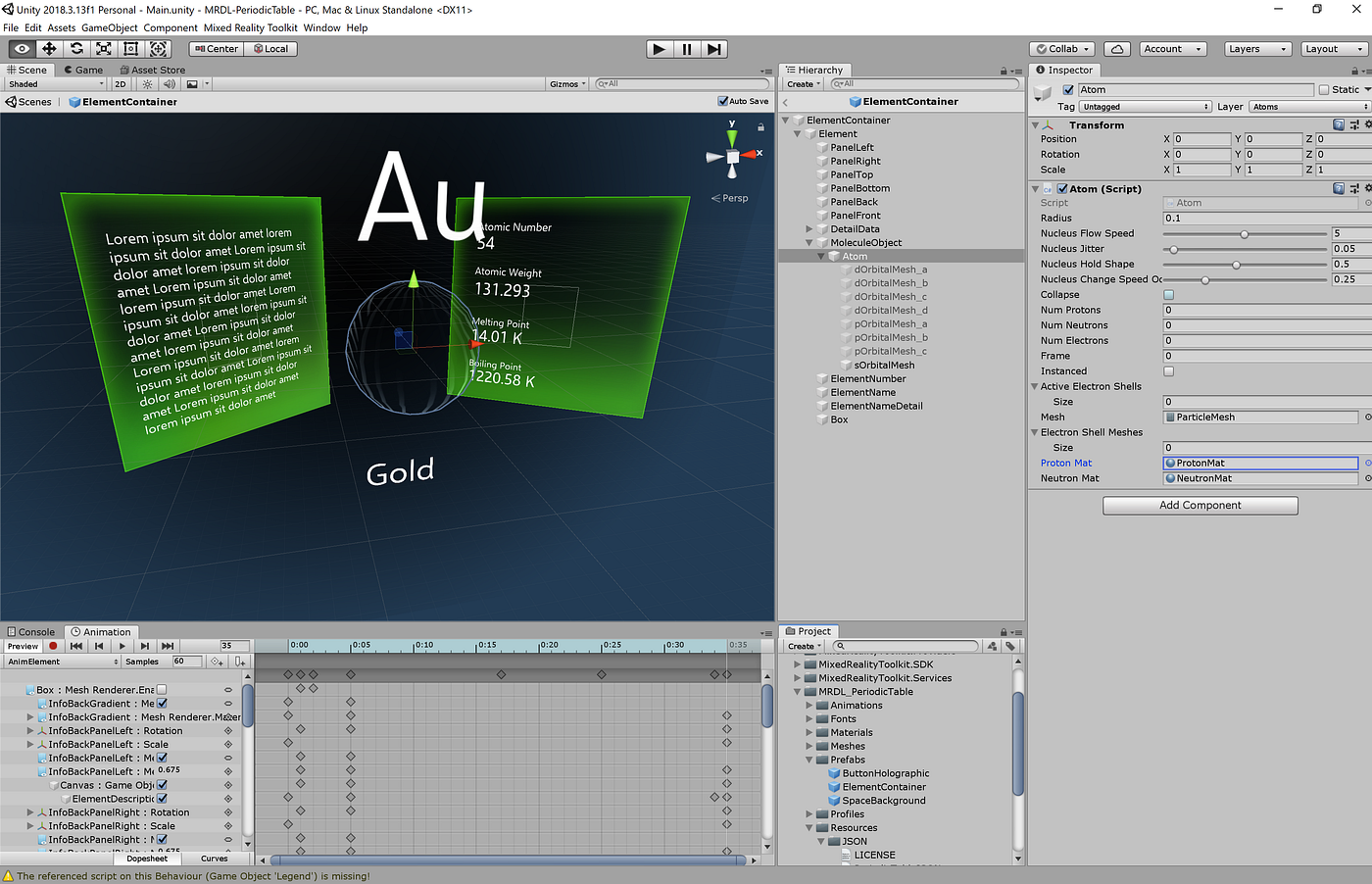

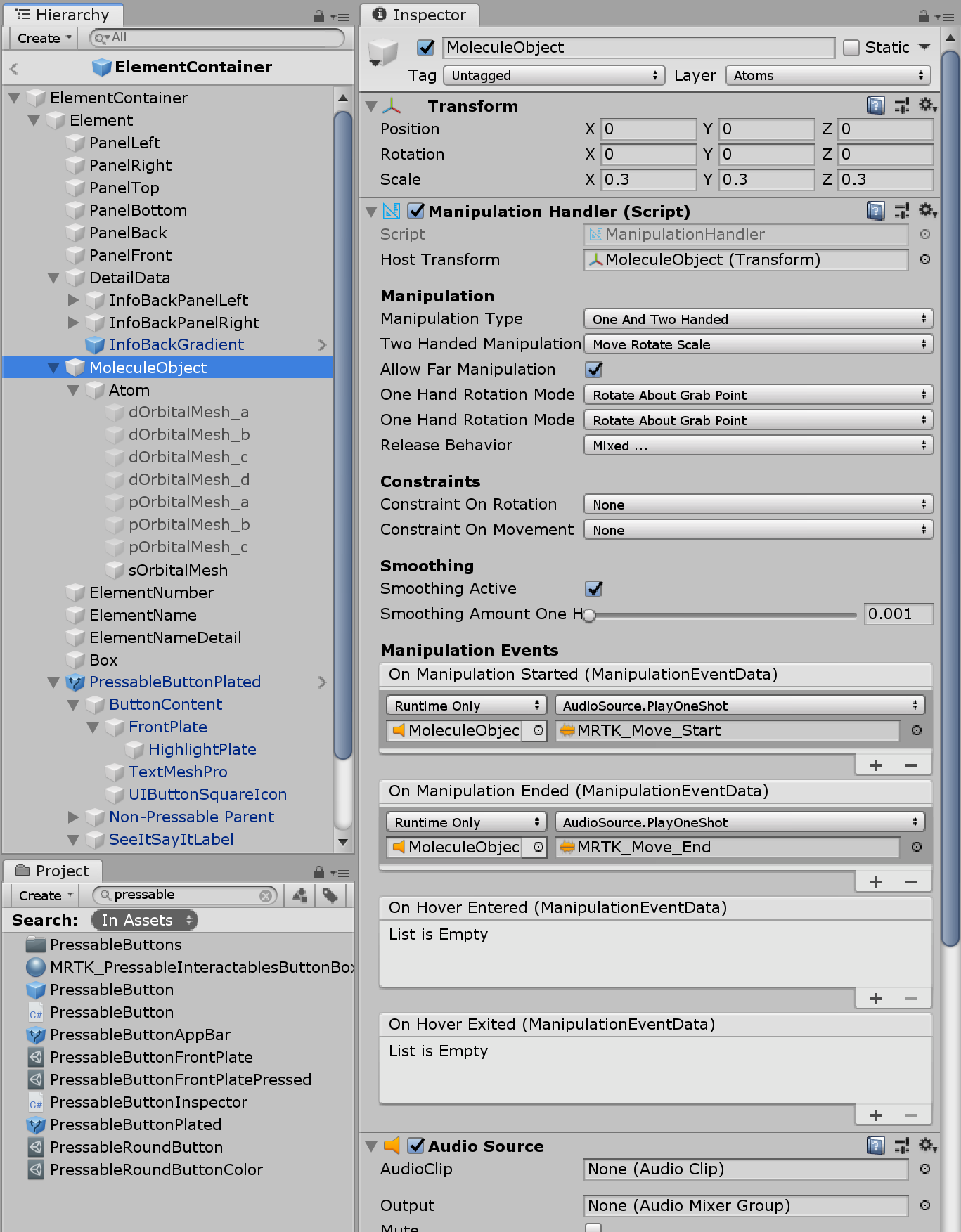

ElementContainer is the cube-shaped prefab that contains the template for the element model, the information layout, and the open/close animations. The entire box acts as a button. In the Element object under ElementContainer, I removed the old ElementButton script and added Interactable.

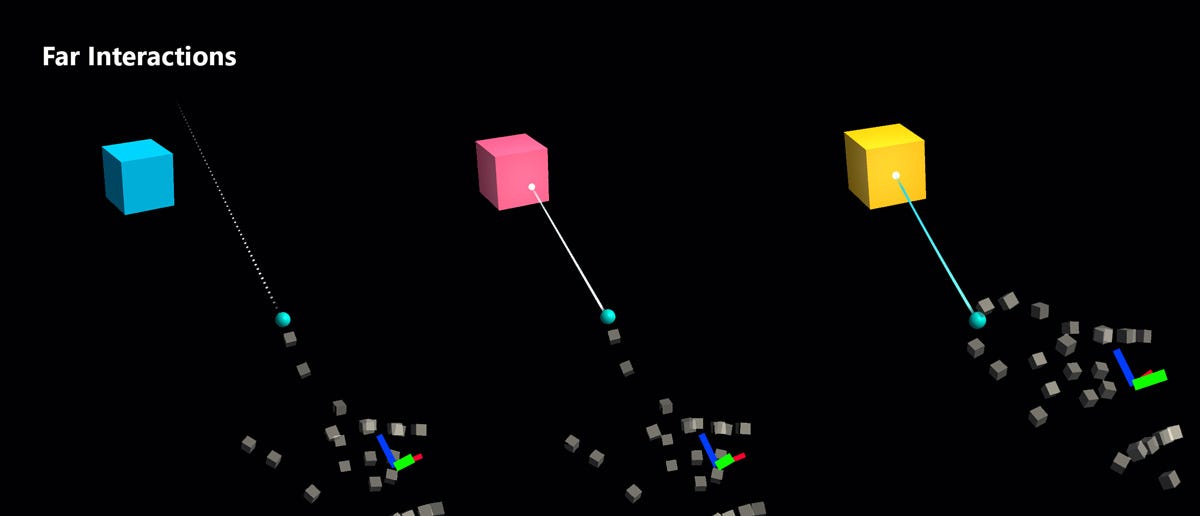

Interactable handles visual states and event triggers for far interaction inputs such as Gaze + Air-tap, hand ray, and motion controller pointer. Since we want to keep the far interaction model for element targeting and selection, we will use Interactable.

See the Interactable object design guidelines for more details.

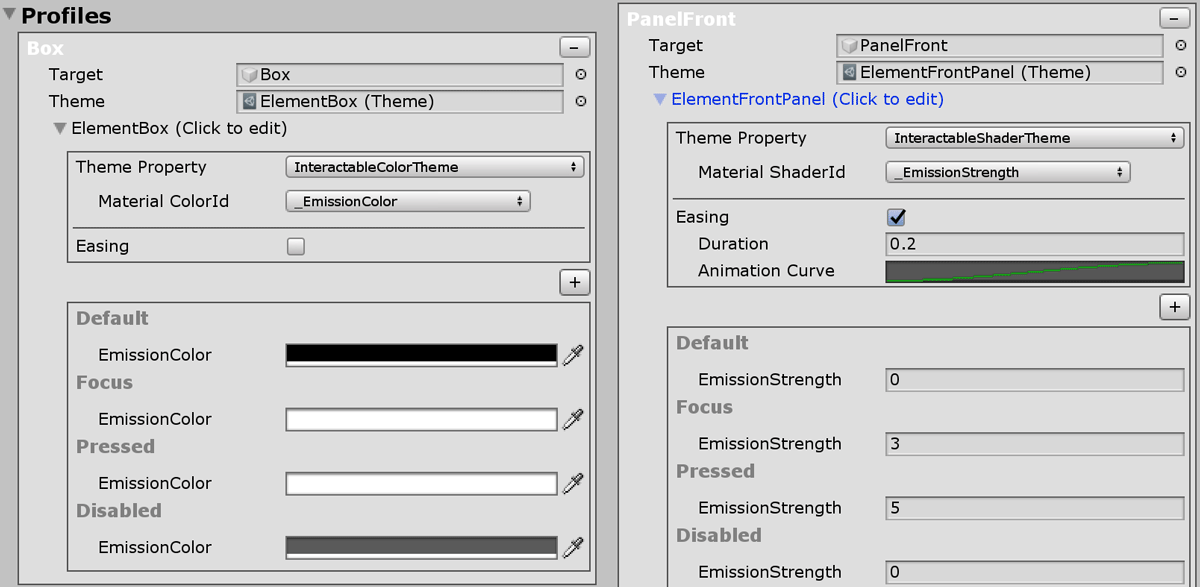

In the Interactable, I have created a new profile ‘ElementBoxTheme’ with the Theme Property ‘ScaleOffsetColorTheme’ and Target object ‘Box’ (which is the outer shell of the element object). With this, we can easily define color, scale, and offset values for the different input states — Default, Focus, Pressed, and Disabled.

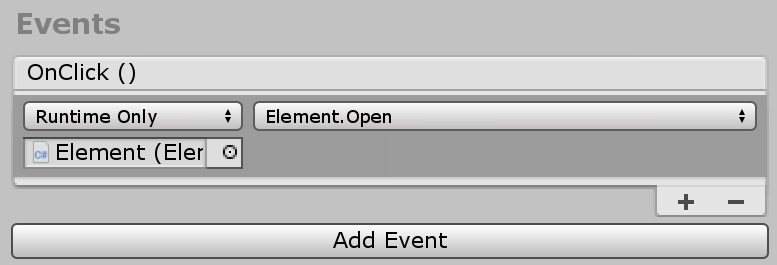

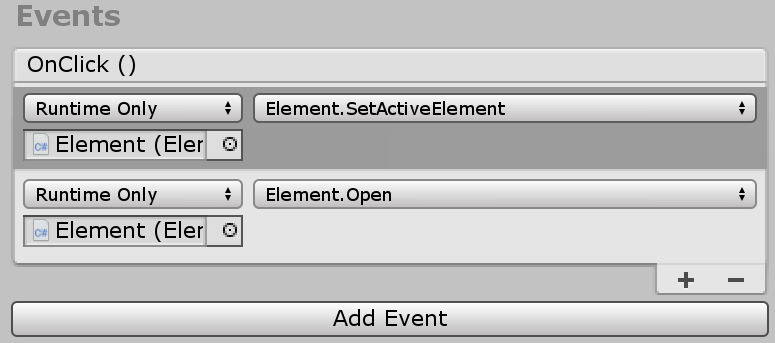

In the Interactable, you can also easily add actions for various types of events. The Open() method in the Element.cs script triggers the animation, opens the element box, and shows the 3D model with information on the panels. SetActiveElement() is called together with it to record the currently opened element.

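    // Records this element as the currently opened one.
    public void SetActiveElement()
    {
        Element element = gameObject.GetComponent<Element>();
        ActiveElement = element;
    }

    // Starts opening the element box; does nothing while a presentation is in progress.
    public void Open()
    {
        if (present.Presenting)
            return;

        StartCoroutine(UpdateActive());
    }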

Under the Events section of the Interactable, I have added two method calls by dragging and dropping the Element script and using the drop-down.
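The same wiring can also be done from code instead of the Inspector. The sketch below is only an illustration, not how the project does it. It assumes the project’s Element class with its public SetActiveElement() and Open() methods sits on the same object as the Interactable, and the ElementClickBinder class name is hypothetical.

```csharp
using Microsoft.MixedReality.Toolkit.UI;
using UnityEngine;

// Hypothetical helper: subscribes to the Interactable's OnClick event at runtime,
// as an alternative to assigning the two method calls in the Inspector.
[RequireComponent(typeof(Interactable))]
public class ElementClickBinder : MonoBehaviour
{
    // The project's Element script; falls back to the one on this object.
    [SerializeField]
    private Element element = null;

    private Interactable interactable;

    private void OnEnable()
    {
        interactable = GetComponent<Interactable>();
        if (element == null)
        {
            element = GetComponent<Element>();
        }
        interactable.OnClick.AddListener(HandleClick);
    }

    private void OnDisable()
    {
        // Unsubscribe so a disabled object no longer reacts to clicks.
        interactable.OnClick.RemoveListener(HandleClick);
    }

    private void HandleClick()
    {
        if (element == null)
        {
            return;
        }
        // Same order as the two Inspector entries: mark as active, then open.
        element.SetActiveElement();
        element.Open();
    }
}
```

For a small number of buttons the Inspector approach described above is simpler; a binder like this mainly helps when objects are generated at runtime.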

The surface type buttons were made with the HolographicButton prefab in HoloToolkit. The HolographicButton prefab has been updated as well, from CompoundButton to Interactable. Additionally, new PressableButton and PressableButtonPlated prefabs have been added, which support direct manipulation with articulated hand tracking input. With PressableButton, you can press the button with your fingertip. PressableButton also uses Interactable.

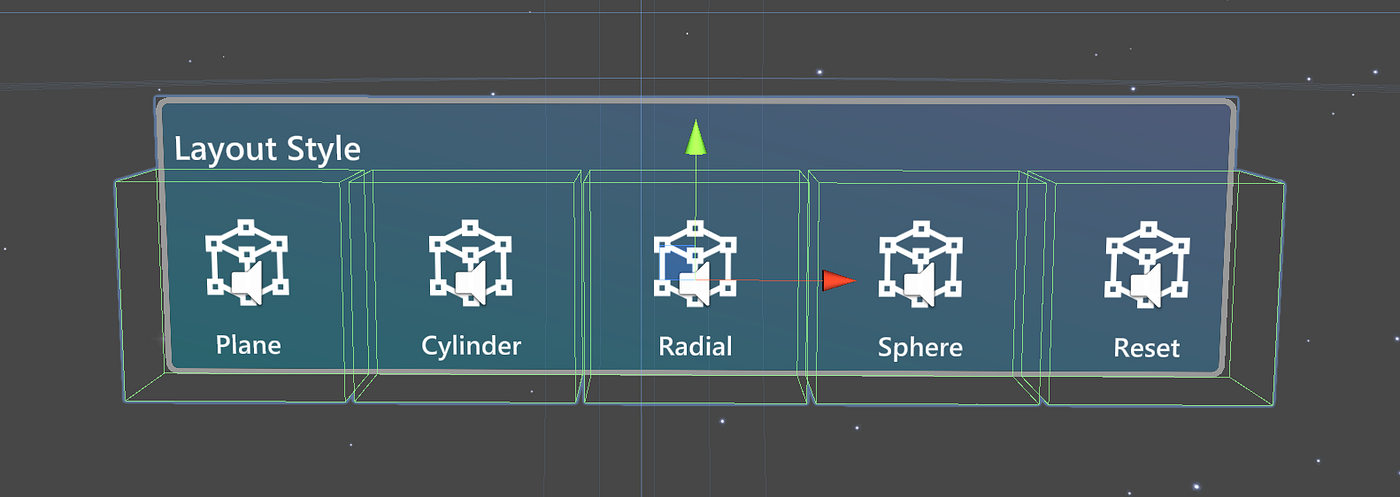

To use direct hand interaction, I have removed the old surface type buttons and added new PressableButtonPlated prefabs.

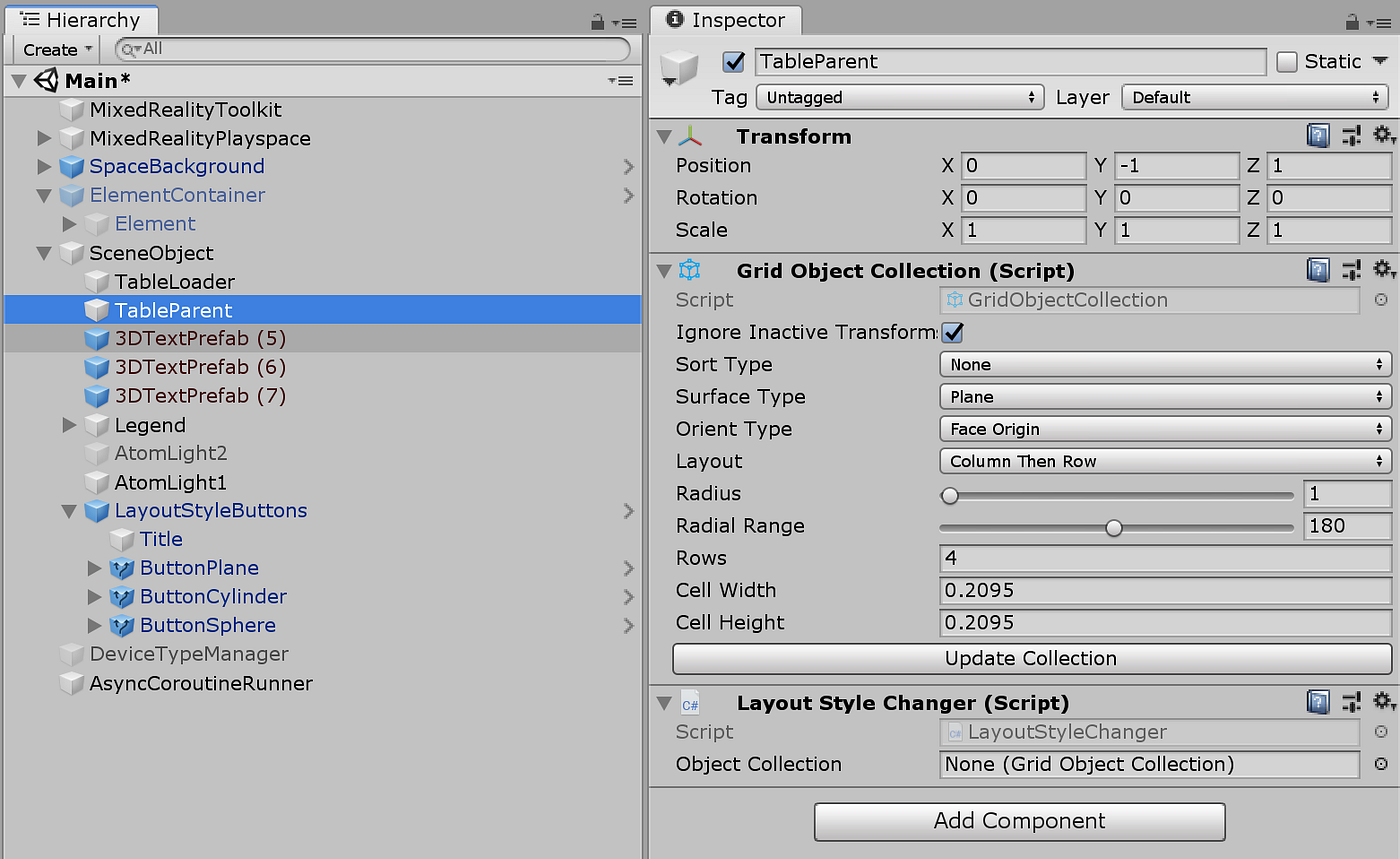

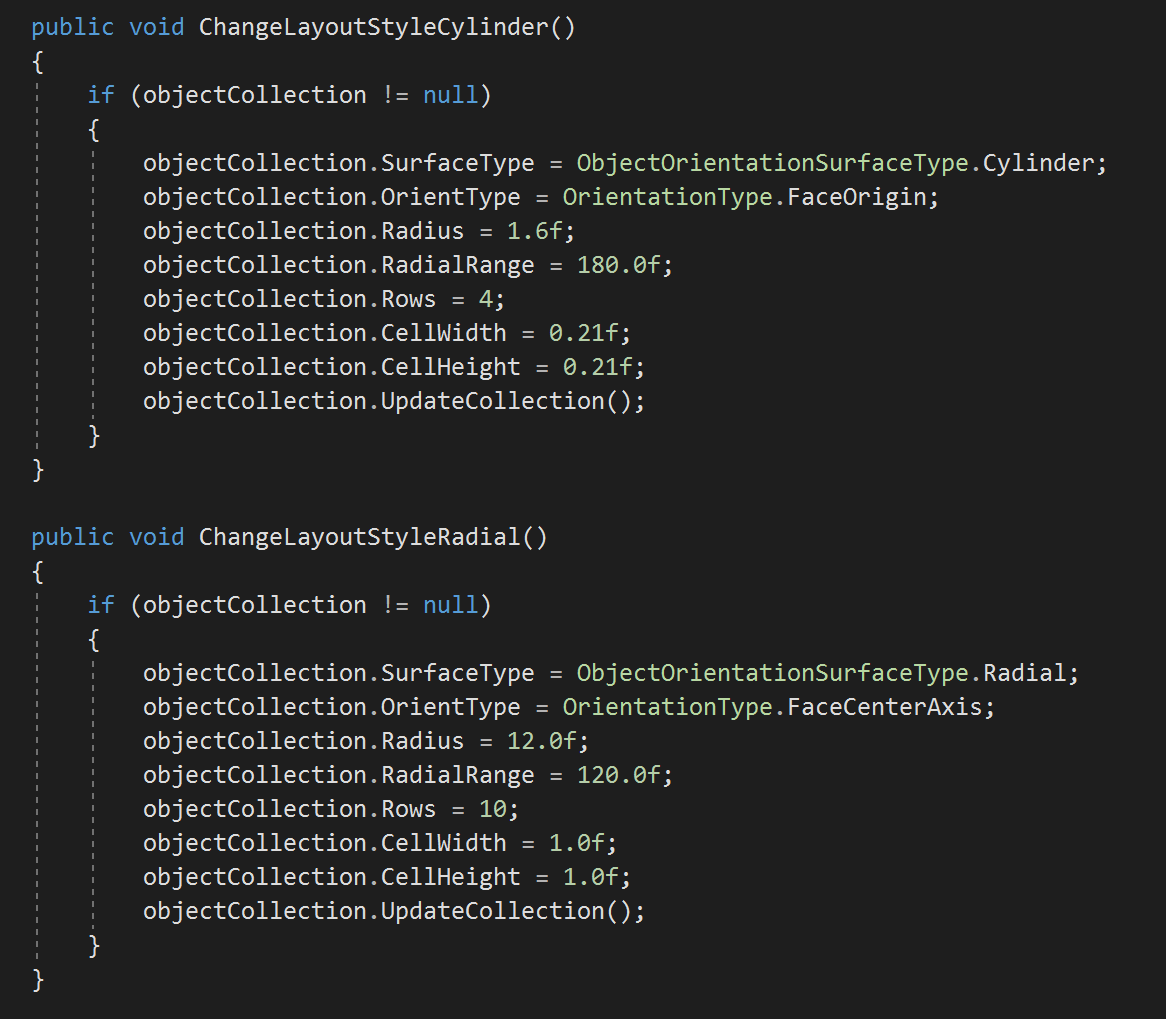

These buttons update the layout style by changing the Grid Object Collection’s Surface Type (explained in the next section).

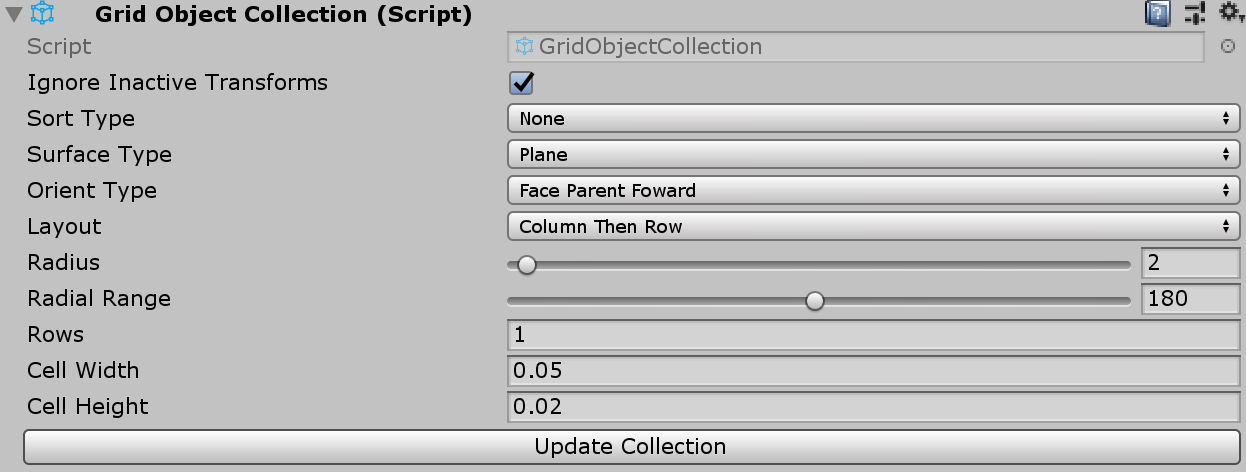

Grid Object Collection is also useful when you want to lay out multiple buttons. Instead of manually calculating the button size and entering coordinates, use Grid Object Collection and adjust Rows and Cell Width/Height.

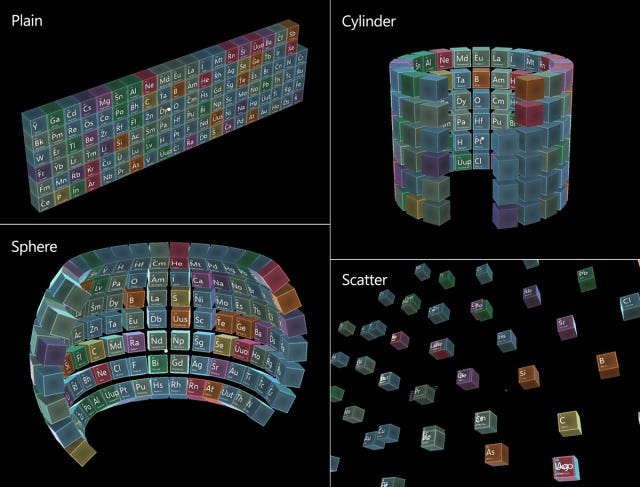

Object Collection

Object Collection is a script that helps you lay out an array of objects in various shapes. In MRTK v2, the script has an updated name: GridObjectCollection.cs

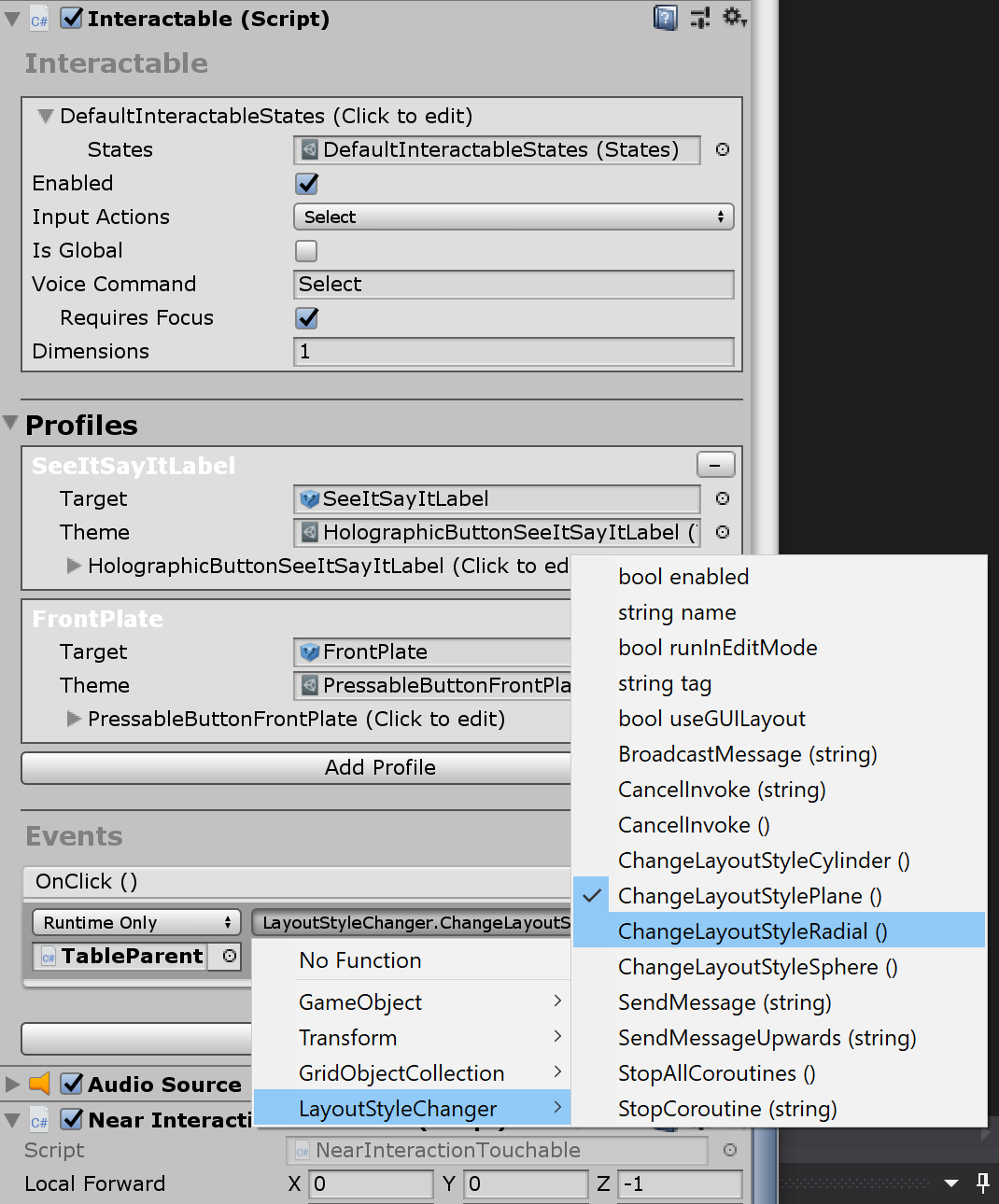

The dynamically generated element objects are located under TableParent. In the TableParent object, I replaced the old ObjectCollection script with the new GridObjectCollection script. To change various properties of the GridObjectCollection through the buttons, I added a new script, LayoutStyleChanger. It simply changes the properties and then updates the collection.

Using the Interactable component in the PressableButtonPlated, we can easily trigger action using the OnClick() event under the Events section. For each surface type button, I have assigned LayoutStyleChanger script’s ChangeLayoutStyleXXX() method.
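As a rough illustration of the "change the properties, then update the collection" idea, here is a minimal sketch of such a script. It is not the LayoutStyleChanger from the repository: the class name, the method names (which only follow the ChangeLayoutStyleXXX() pattern), and the radius value are assumptions made for this example.

```csharp
using Microsoft.MixedReality.Toolkit.Utilities;
using UnityEngine;

// Hypothetical sketch: switches a GridObjectCollection between surface types.
// Each public method can be assigned to a button's OnClick() event.
public class LayoutStyleChangerSketch : MonoBehaviour
{
    [SerializeField]
    private GridObjectCollection collection = null;

    // Assumed value; used by the curved surface types.
    [SerializeField]
    private float curvedRadius = 2.0f;

    public void ChangeLayoutStylePlane()
    {
        Apply(ObjectOrientationSurfaceType.Plane);
    }

    public void ChangeLayoutStyleCylinder()
    {
        Apply(ObjectOrientationSurfaceType.Cylinder);
    }

    public void ChangeLayoutStyleSphere()
    {
        Apply(ObjectOrientationSurfaceType.Sphere);
    }

    public void ChangeLayoutStyleRadial()
    {
        Apply(ObjectOrientationSurfaceType.Radial);
    }

    private void Apply(ObjectOrientationSurfaceType surfaceType)
    {
        if (collection == null)
        {
            return;
        }

        collection.SurfaceType = surfaceType;
        collection.Radius = curvedRadius;

        // Property changes alone do not move the children;
        // UpdateCollection() recomputes their positions.
        collection.UpdateCollection();
    }
}
```

Keeping one public parameterless method per layout makes each of them selectable from the OnClick() drop-down in the Inspector.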

Interaction Receiver

In MRTK v2, you can find the same InteractionReceiver class in the Microsoft.MixedReality.Toolkit.UI namespace.

InteractionReceiver in MRTK v2 supports additional events related to hand tracking interactions such as TouchStarted and TouchCompleted.

Running in the Unity editor

After resolving the scripts and prefab errors, I was able to run the app in the editor. MRTK v2 supports in-editor input simulation including articulated hand tracking. You can easily test HoloLens input using the keyboard and mouse. For more information on the in-editor input simulation, please refer to this page: Input Simulation Service.

Once you update the app to MRTK v2, the new input system automatically supports the available input types. For example, on HoloLens, you can use gaze and the air-tap gesture to target and select. On HoloLens 2, the hand ray is automatically visualized when a hand is detected. Because of this, Periodic Table now supports both HoloLens (1st gen) and HoloLens 2.

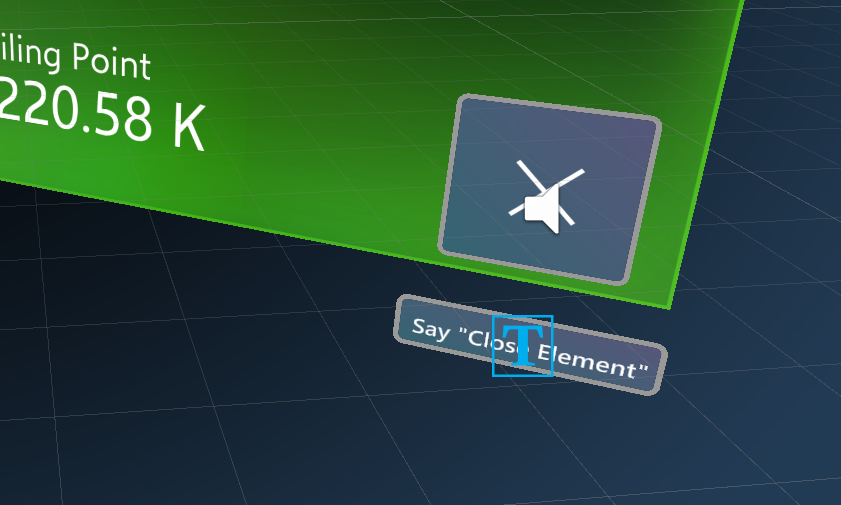

Adjusting the element view position for the near interactions, adding PressableButton for the ‘Close’ action

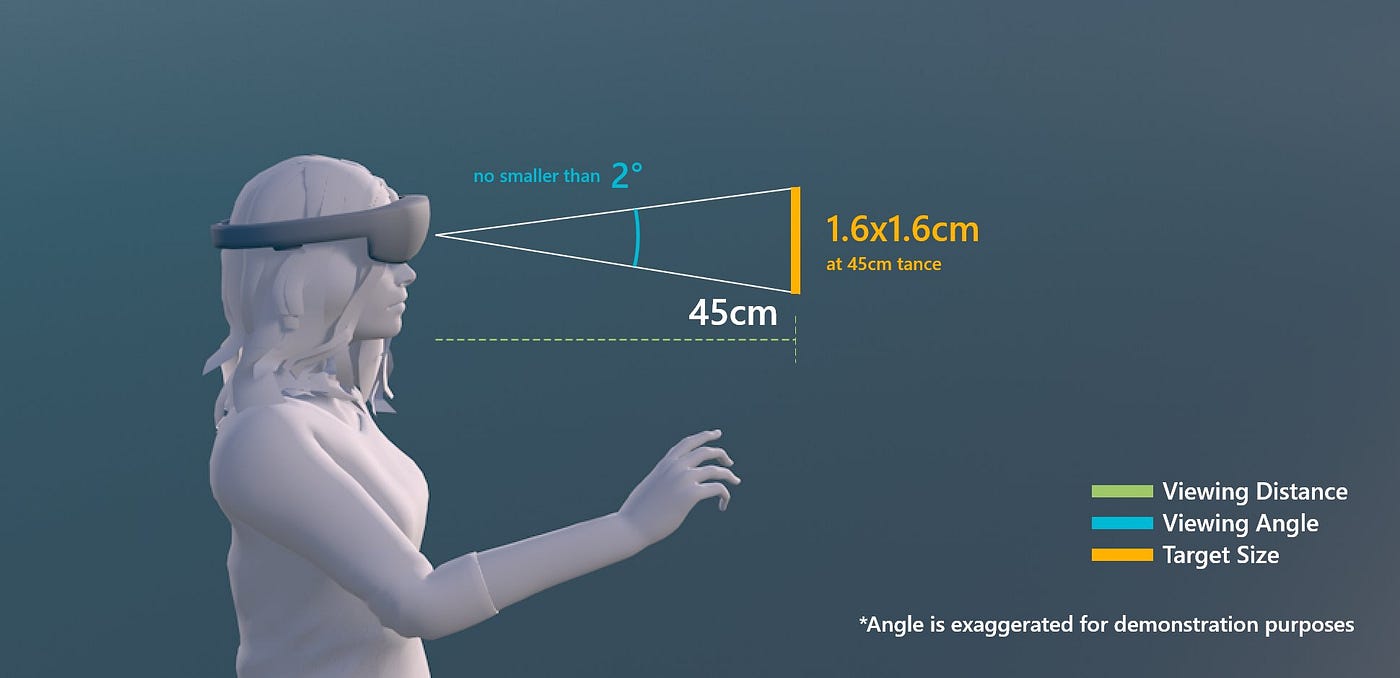

Once an element is focused and selected, the box flies to the user and opens up to show a 3D model and information panels. Since the original version was designed for gaze and air-tap, the element was still far from the user. To leverage HoloLens 2’s direct hand input, I adjusted the element position to the near interaction range, which is about 45 cm from the user.

The original version had an implicit button around the 3D model for closing the element box with air-tap. Since the information panel and the element object are now within near interaction distance, we can use a pressable button for the ‘Close’ action. MRTK’s pressable button measures 3.2 × 3.2 cm to support both near and far interactions.

Close button in action with hand tracking input simulation

These design guidelines will help you understand HoloLens 2’s input interaction models and recommendations.

Instinctual Interaction

Interactable Object

Direct Manipulation with Hands

Adding HoloLens 2’s articulated hand interaction

One of the most exciting features in HoloLens 2 is articulated hand tracking input. Using this, we can achieve more instinctual interactions by directly interacting with holographic objects.

In the Periodic Table app, the hero content object is the 3D element model. By adding direct manipulation interactions, the user can observe and inspect the model by grabbing it and bringing it closer or by scaling it and rotating it.

Adding direct manipulation is very easy in MRTK v2. The ManipulationHandler script handles both one-handed and two-handed manipulation. It also supports both near and far interactions, so you can use it for gaze-based or hand ray-based interactions too. By simply assigning the ManipulationHandler script, you can make any object manipulable: grab, move, rotate, and scale.
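If you prefer to set this up from a script rather than in the Inspector, a minimal sketch could look like the following. The MakeManipulable class name is hypothetical, and the BoxCollider fallback is an assumption for objects that have no collider yet. NearInteractionGrabbable is added as well so that the object can be grabbed directly with articulated hands.

```csharp
using Microsoft.MixedReality.Toolkit.Input;
using Microsoft.MixedReality.Toolkit.UI;
using UnityEngine;

// Hypothetical helper: makes the object it is attached to manipulable
// with near (grab) and far (hand ray, gaze) interactions.
public class MakeManipulable : MonoBehaviour
{
    private void Awake()
    {
        // Pointers need a collider to hit; add a simple one if none exists (assumption).
        if (GetComponent<Collider>() == null)
        {
            gameObject.AddComponent<BoxCollider>();
        }

        // Handles one-handed and two-handed move, rotate, and scale.
        if (GetComponent<ManipulationHandler>() == null)
        {
            gameObject.AddComponent<ManipulationHandler>();
        }

        // Marks the object as grabbable for near interaction with articulated hands.
        if (GetComponent<NearInteractionGrabbable>() == null)
        {
            gameObject.AddComponent<NearInteractionGrabbable>();
        }
    }
}
```

The default BoxCollider may not fit the mesh well; on a real model it is usually better to add and size the collider by hand.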

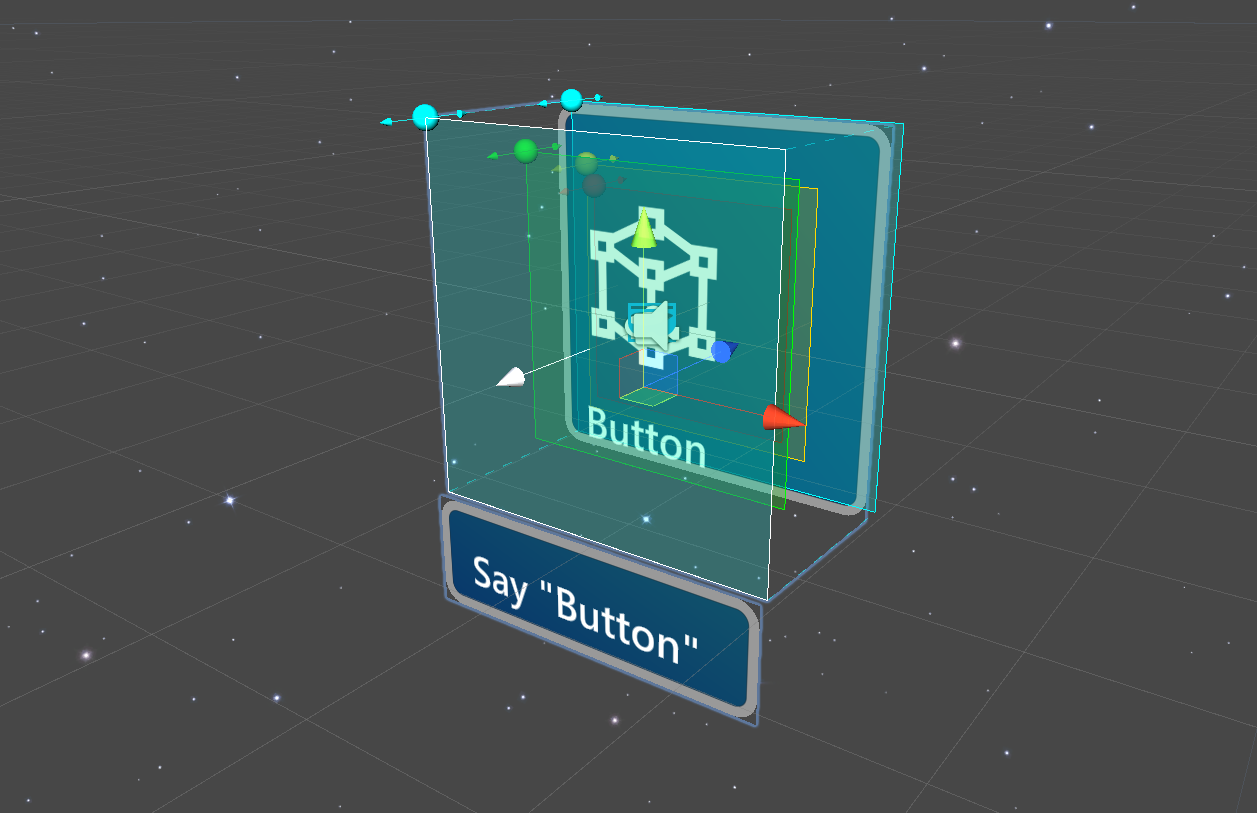

Enabling the Voice Command

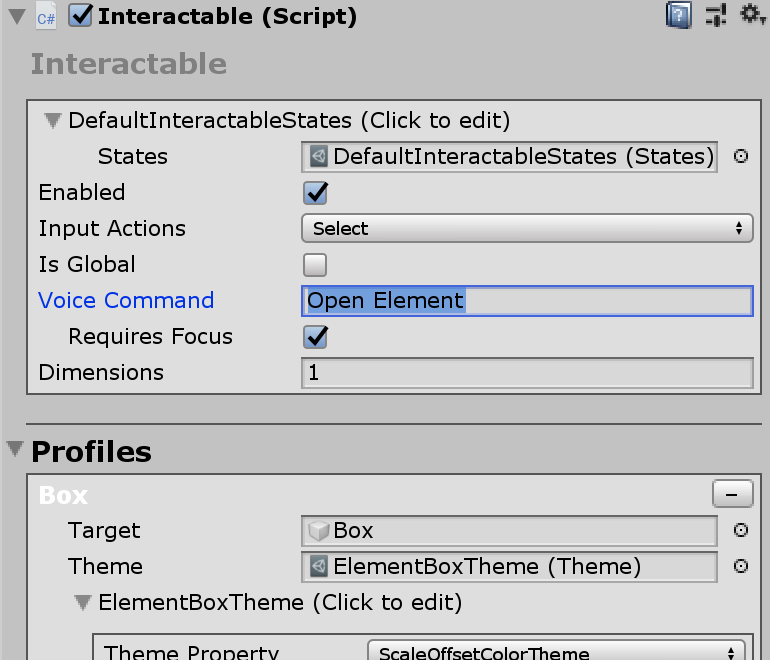

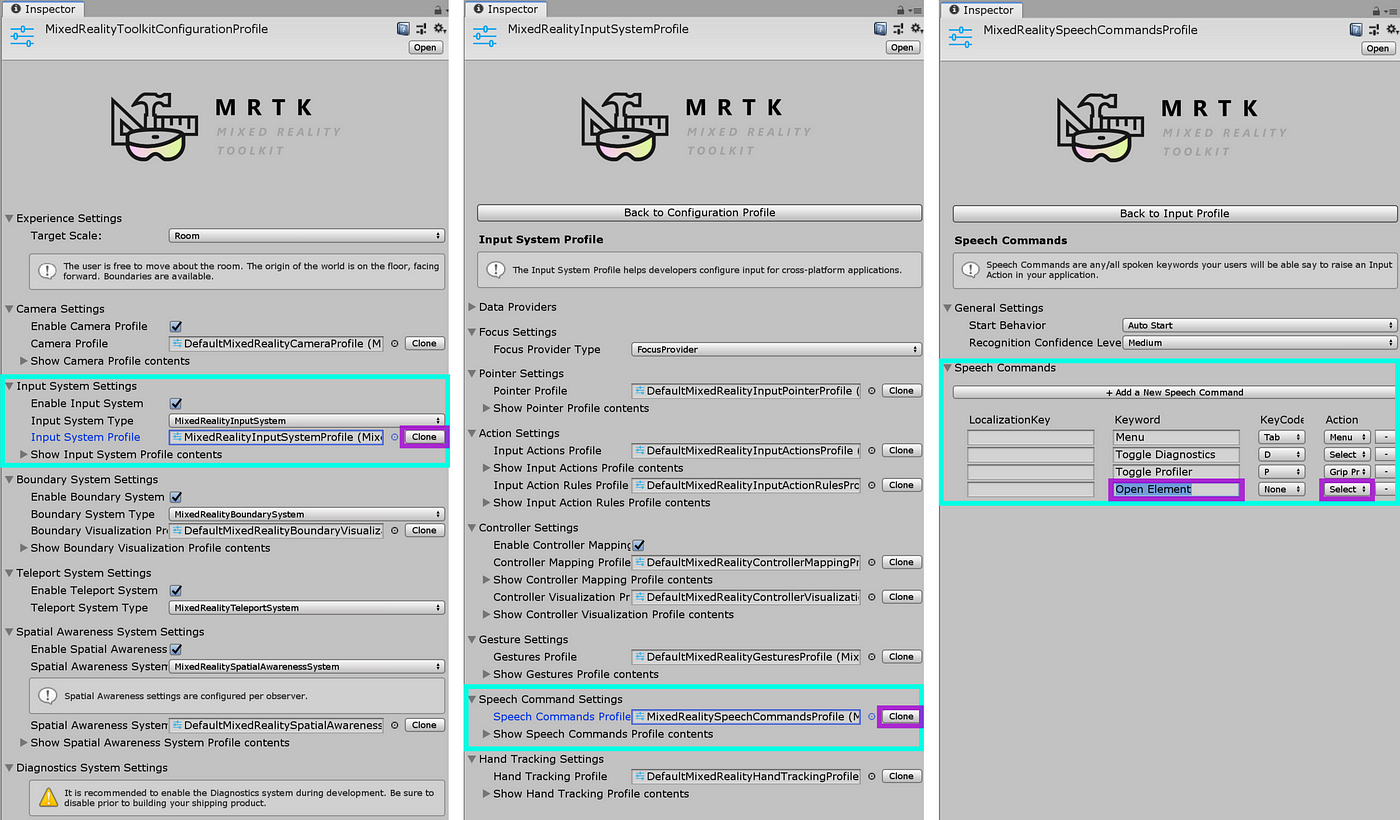

The Interactable script which we used in the Element box provides the voice command functionality. To use this voice command feature, you need to:

- Add the keyword to the Voice Command field under the Interactable.

- Add the keyword to the MixedRealitySpeechCommandsProfile. You can see this profile by selecting MixedRealityToolkit in the Hierarchy panel, then clicking through MixedRealityInputSystemProfile > MixedRealitySpeechCommandsProfile in the Inspector panel.

*To add your keyword to the MixedRealitySpeechCommandsProfile, you need to clone the profile using the ‘Clone’ button.

Once you have added the keywords, you can test them in the editor’s Play mode using the PC’s microphone.
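The Voice Command field on the Interactable is all the Periodic Table app needs. If one of your own scripts has to react to a keyword, a handler along these lines is one possible approach. This is a sketch under assumptions: the class name and the "Close" keyword are hypothetical, the keyword still has to be registered in the MixedRealitySpeechCommandsProfile, and the eventData.Command.Keyword access reflects my understanding of the MRTK 2.0 API (earlier release candidates may expose the recognized text differently).

```csharp
using System;
using Microsoft.MixedReality.Toolkit.Input;
using UnityEngine;

// Hypothetical example: logs a message when a registered keyword is recognized
// while this object receives speech events (for example, while it has focus).
public class KeywordLogger : MonoBehaviour, IMixedRealitySpeechHandler
{
    // Assumed keyword; it must also exist in the speech commands profile.
    [SerializeField]
    private string keyword = "Close";

    public void OnSpeechKeywordRecognized(SpeechEventData eventData)
    {
        // Compare case-insensitively so "close" and "Close" both match.
        if (string.Equals(eventData.Command.Keyword, keyword, StringComparison.OrdinalIgnoreCase))
        {
            Debug.Log("Recognized keyword: " + keyword);
        }
    }
}
```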

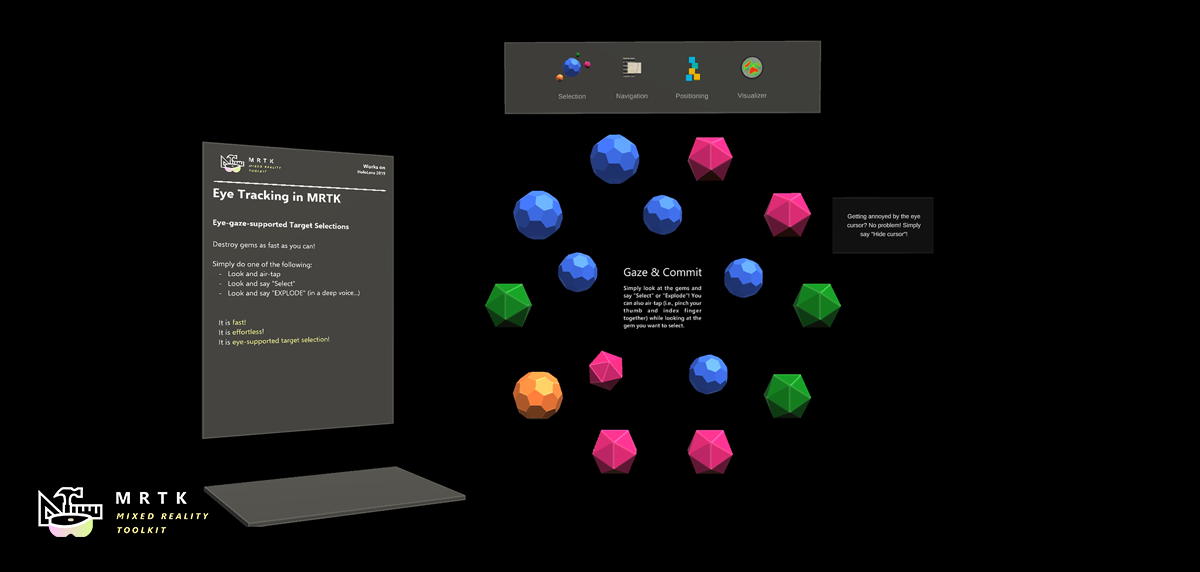

Adding HoloLens 2’s eye tracking input for the automatic text scrolling

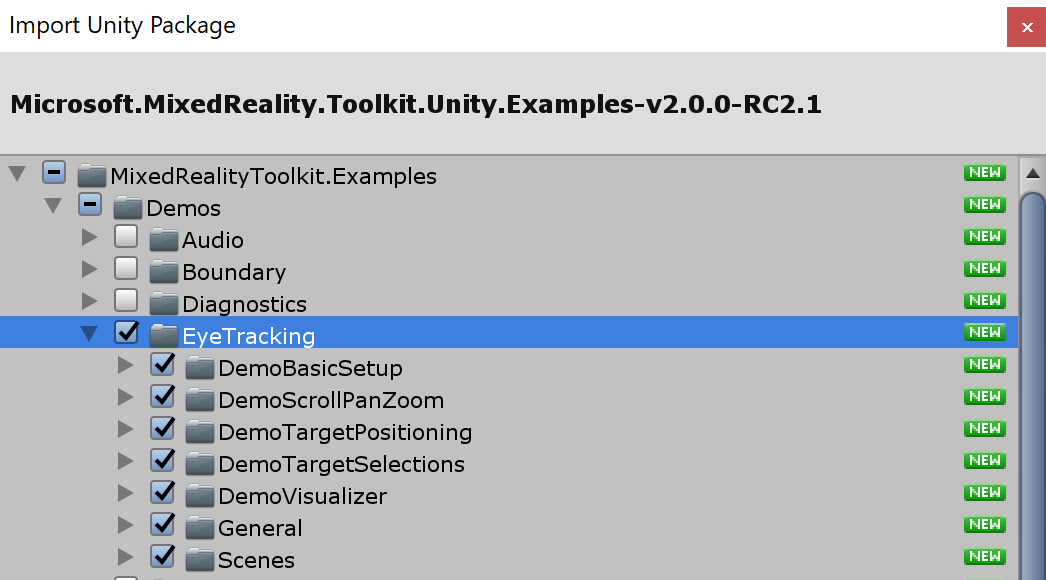

Eye tracking is one of the most exciting features in HoloLens 2. You can learn about various types of eye tracking interactions through MRTK’s eye tracking examples. You can find these examples in Microsoft.MixedReality.Toolkit.Unity.Examples-VERSION.unitypackage, under the folder MixedRealityToolkit.Examples/Demos/EyeTracking.

For the Periodic Table of the Elements app, we will use eye tracking’s auto-scrolling feature on the left information panel.

Enabling the Eye Tracking input

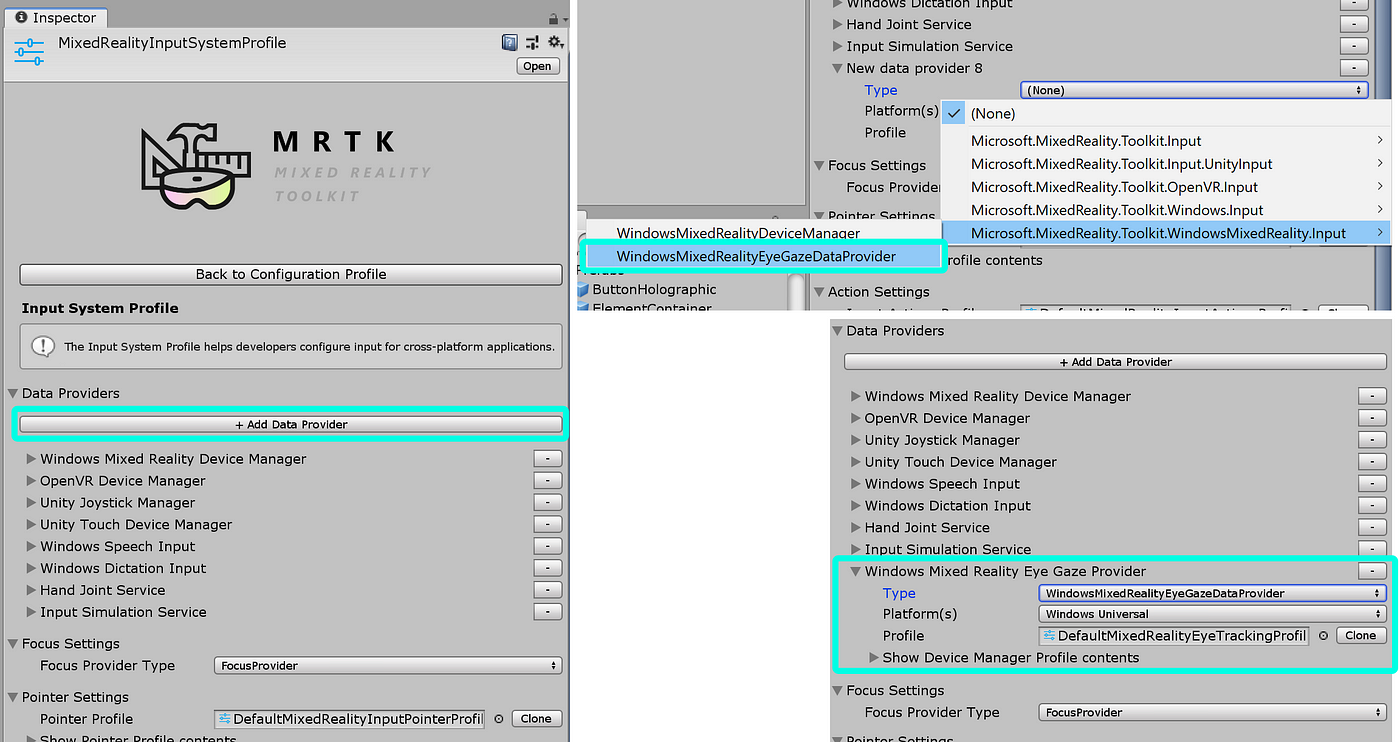

To enable eye tracking input on HoloLens 2, you need to add the Eye Gaze Data Provider to MRTK’s Data Providers under InputSystemProfile. For more details, please refer to this page: Getting started with Eye Tracking

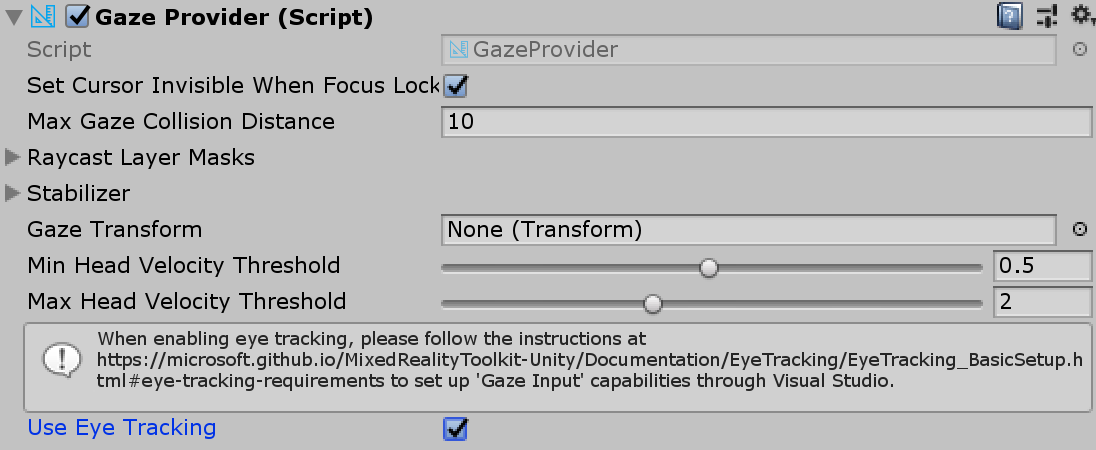

One additional step to enable eye tracking input is to check the Use Eye Tracking checkbox in the Gaze Provider. You can find the Gaze Provider under MixedRealityPlayspace > Main Camera.

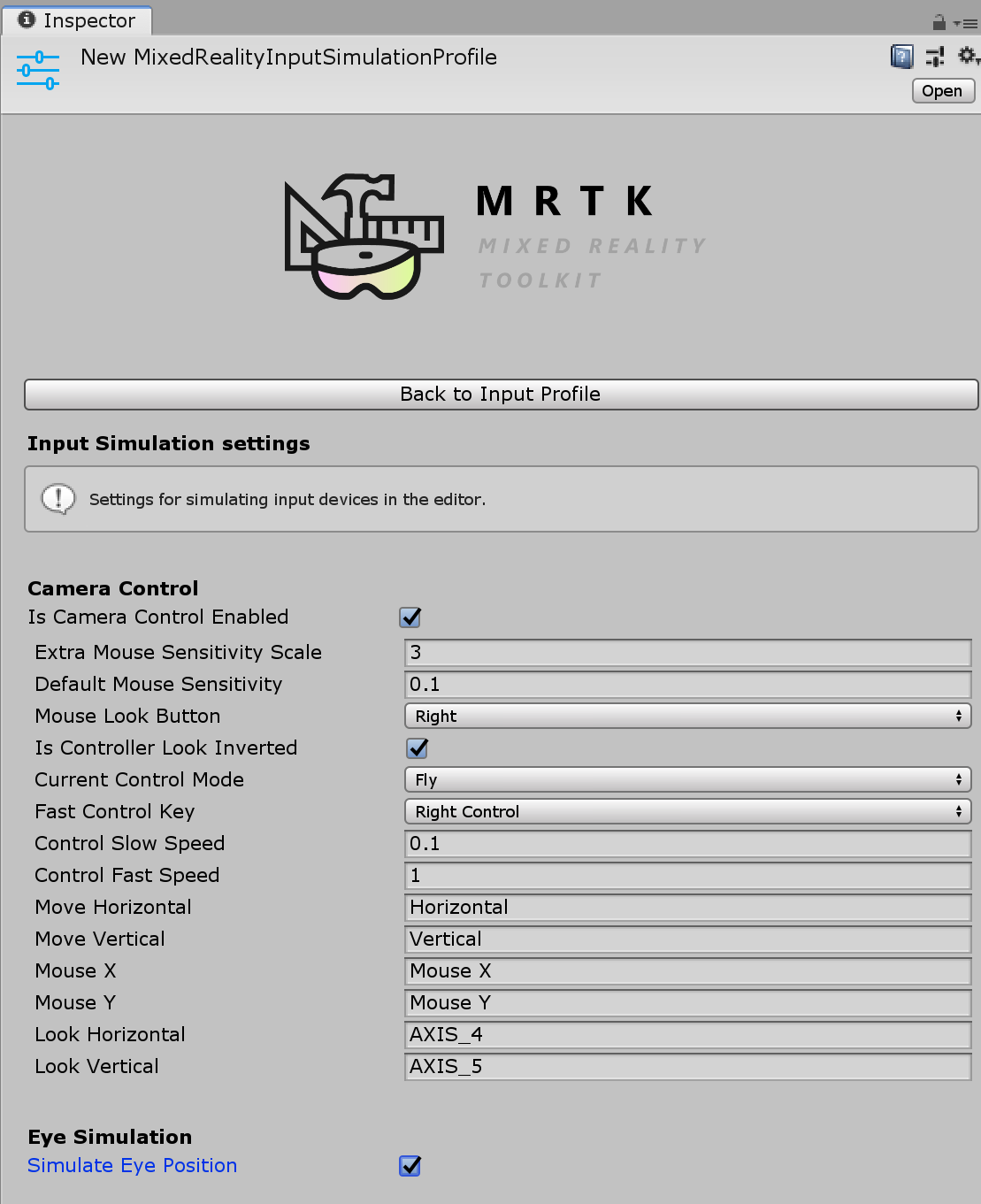

To simulate the eye tracking in the Unity editor, you need to check Simulate Eye Position in the MixedRealityInputSimulationProfile.

To build and deploy to the device, the ‘GazeInput’ capability must be enabled in the application manifest in Visual Studio.

Auto-scroll with Eye Tracking input

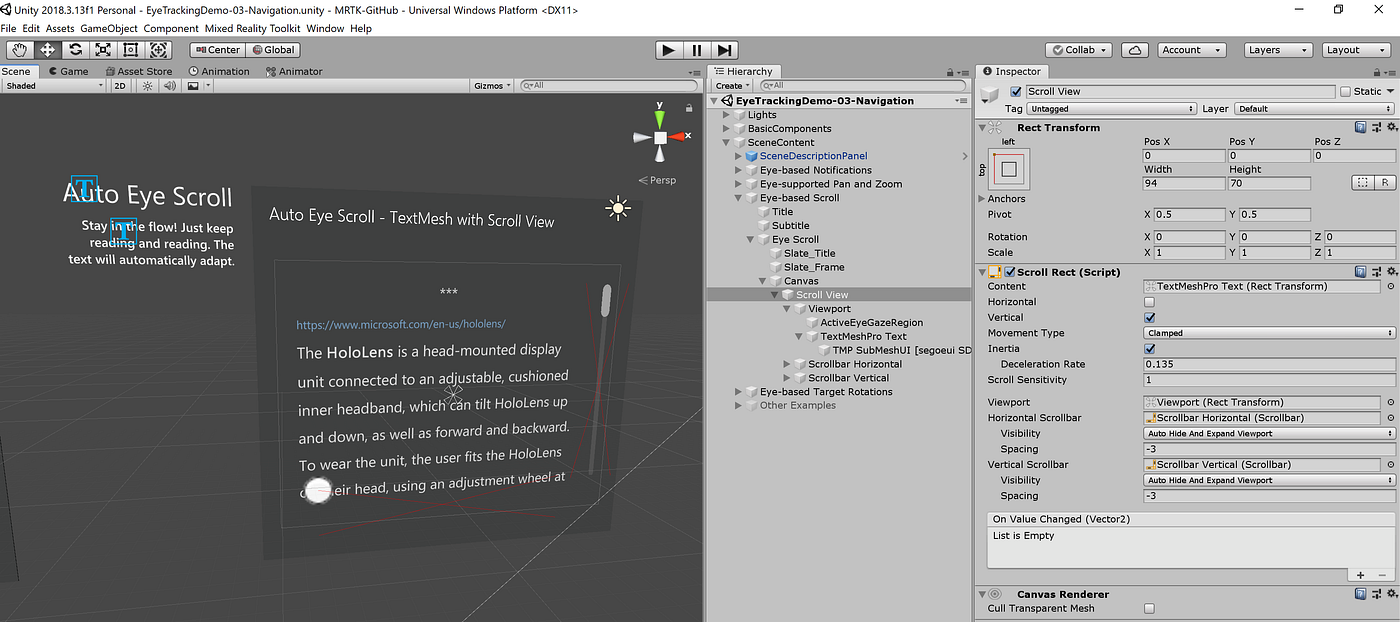

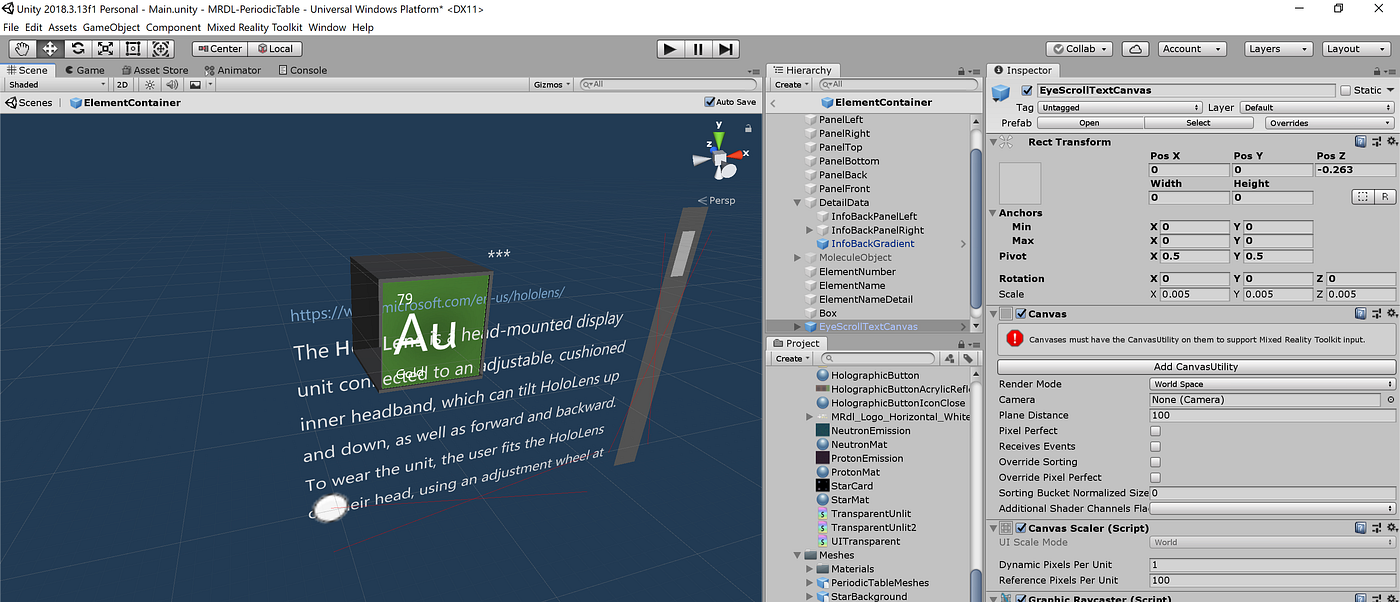

You can find the script and example of making Unity’s TextMesh Pro auto scroll with eye tracking in the EyeTrackingDemo-03-Navigation.unity scene.

You can find the Scroll View component under Eye-based-Scroll > Eye Scroll > Canvas.

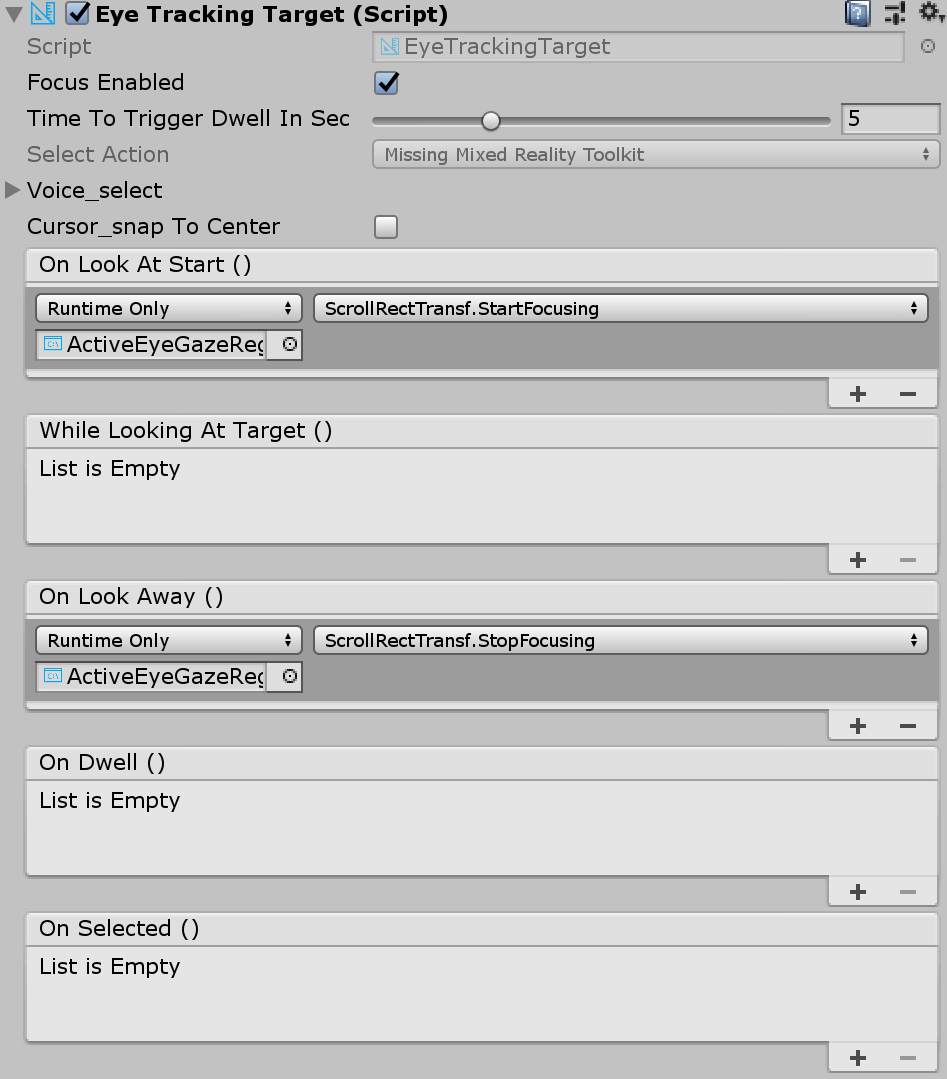

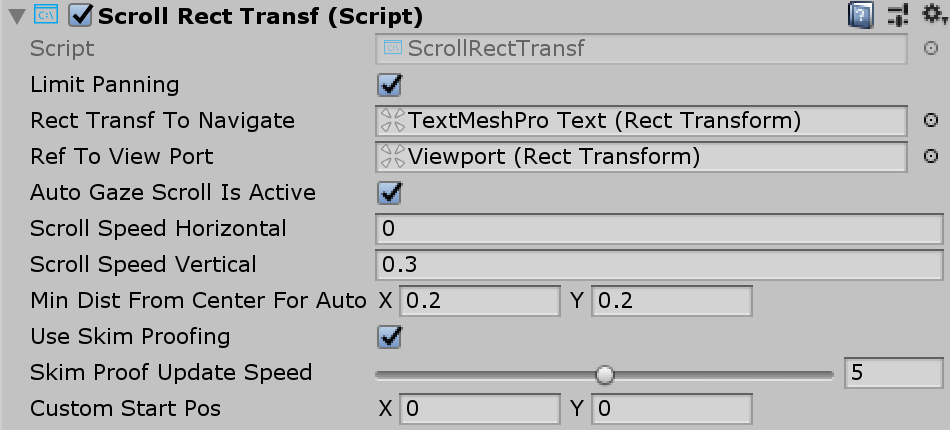

The ActiveEyeGazeRegion object has the scripts EyeTrackingTarget and ScrollRectTransf. You can see that the scripts provide various options.

From the MixedRealityToolkit.Examples package, I imported the EyeTracking folder (MixedRealityToolkit.Examples/Demos/EyeTracking) into the Periodic Table app project.

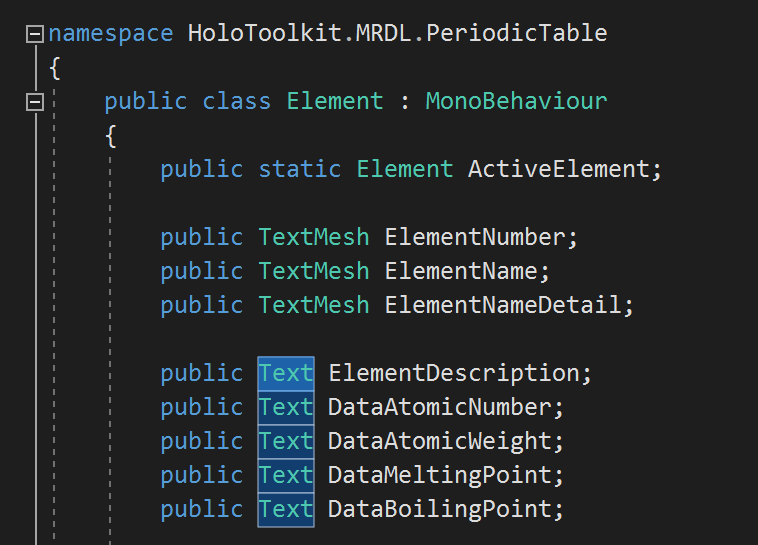

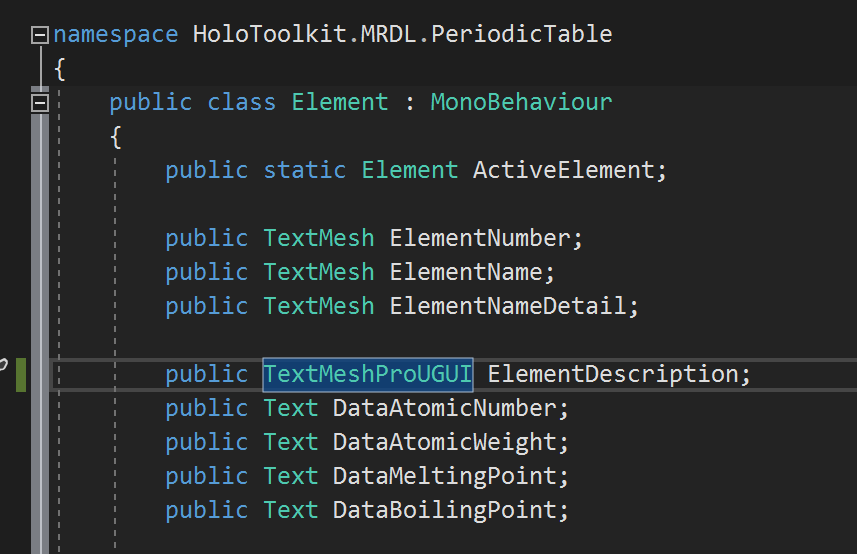

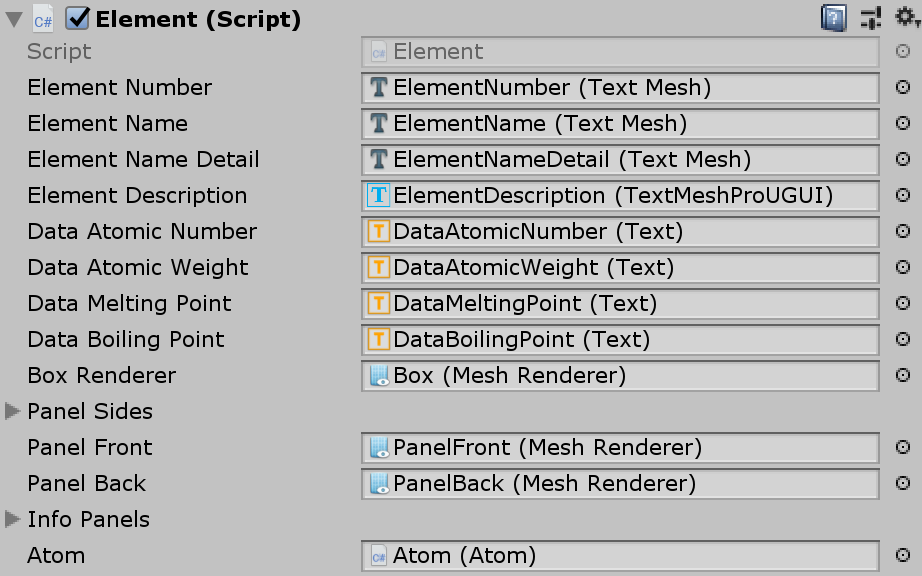

Since the original Periodic Table app used TextMesh for the text display, I have updated the code to use TextMesh Pro.
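The code change itself is small. As a hedged sketch (the class, field, and method names here are hypothetical and not taken from the project), a script that previously wrote to a TextMesh can reference TextMesh Pro’s TMP_Text base class instead, which covers both the 3D TextMeshPro component and the canvas-based TextMeshProUGUI component:

```csharp
using TMPro;
using UnityEngine;

// Hypothetical example: sets the description text on a TextMesh Pro component.
public class ElementDescriptionText : MonoBehaviour
{
    // Before: private TextMesh descriptionText;
    // TMP_Text accepts either TextMeshPro (3D) or TextMeshProUGUI (canvas).
    [SerializeField]
    private TMP_Text descriptionText = null;

    public void SetDescription(string description)
    {
        if (descriptionText == null)
        {
            return;
        }

        // Same .text property as the legacy TextMesh, so call sites barely change.
        descriptionText.text = description;
    }
}
```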

When you run the app in the editor, you can see the text scroll automatically based on your eye gaze position. On the device, the scrolling feels more natural because the text moves as you simply keep reading.

Project source code repository

You can find the Periodic Table of the Elements app in the Mixed Reality Design Lab’s GitHub. The branch name is ‘master’. Currently, it is based on MRTK RC2.1. It was tested with Unity 2018.3.13f1.

microsoft/MRDesignLabs_Unity_PeriodicTable: Periodic Table of the Elements for HoloLens and Immersive headset (github.com)
