Mixed Reality blends computer-generated digital content with the real-world physical environment. As the technology has advanced, digital content has become able to interact with the physical environment. For example, you can place a digital 3D model on top of a physical table’s surface, or bounce a 3D ball off the physical floor. The term “Mixed Reality” was introduced by Paul Milgram and Fumio Kishino in the paper “A Taxonomy of Mixed Reality Visual Displays” (1994).

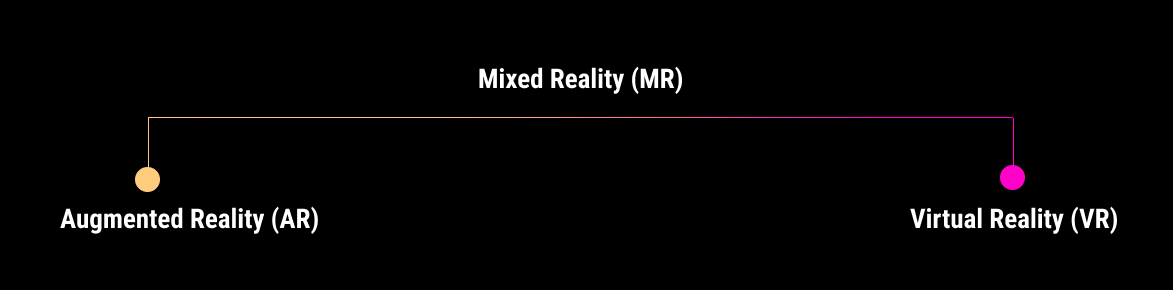

Mixed Reality, Augmented Reality, and Virtual Reality

You can think of Mixed Reality as an umbrella term, a spectrum covering both Augmented Reality (AR) and Virtual Reality (VR) experiences.

In Augmented Reality, digital graphics are overlaid on the real world around us through transparent lenses (e.g. Microsoft HoloLens, Magic Leap) or a pass-through camera (e.g. Meta Quest Pro). You can still see the physical environment, much as you would through ordinary glasses.

Because you can still see the physical environment and the objects in it, AR applications enhance the real world with digital information. Guided training, wayfinding, and 3D model review are typical examples of AR.

In Virtual Reality, an opaque headset display (Meta Quest, HTC Vive, Samsung Odyssey) fully covers your eyes and surrounds you with a digital world. You cannot see your surroundings, and you are fully immersed in the virtual world.

XR, Extended Reality

The term XR is often used to encompass a variety of immersive technologies, including VR, AR, and MR, as well as related technologies such as holographic displays and haptic (touch) feedback. It describes a broad range of applications and experiences that combine real and virtual elements and that allow users to see, hear, and interact with the world in new ways.

Key elements of Mixed Reality

Tracking

Maintaining accurate registration between real objects and computer-generated digital objects is one of the most important elements in Mixed Reality. To achieve this, an accurate tracking system must track the position and orientation of the user with respect to the real world. Tracking techniques can be classified into two main approaches: sensor-based and visual-based. The sensor-based approach uses data from sensors such as time-of-flight (ToF) depth sensors, accelerometers, gyroscopes, and magnetometers to track the user’s pose, whereas the visual-based approach relies on images captured by the camera and the feature points detected in them.
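To make the sensor-based approach more concrete, here is a minimal sketch of a complementary filter, one common way to fuse gyroscope and accelerometer readings into an orientation estimate. It is an illustration only, not how any particular headset tracks pose: the function names, the axis convention, the blend factor `alpha`, and the simulated readings are all assumptions made for this example.

```python
import math

def accel_pitch(ax, ay, az):
    """Pitch (radians) implied by the gravity direction in an accelerometer reading.

    Assumed convention: readings are in units of g, and a level, stationary
    device reports (0, 0, 1).
    """
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))

def complementary_filter(pitch, gyro_rate, accel, dt, alpha=0.98):
    """Blend the integrated gyroscope rate with the accelerometer-based pitch.

    The gyroscope is smooth but drifts over time; the accelerometer is noisy
    but does not drift. alpha controls how much the gyroscope is trusted.
    """
    gyro_estimate = pitch + gyro_rate * dt
    return alpha * gyro_estimate + (1.0 - alpha) * accel_pitch(*accel)

# Simulated stationary device tilted by 0.1 rad, with a gyroscope that
# wrongly reports a constant 0.01 rad/s rotation (sensor bias).
true_pitch = 0.1
accel = (-math.sin(true_pitch), 0.0, math.cos(true_pitch))
pitch = 0.0
for _ in range(500):
    pitch = complementary_filter(pitch, gyro_rate=0.01, accel=accel, dt=0.01)

print(f"estimated pitch: {pitch:.4f} rad (true value: {true_pitch} rad)")
```

Integrating the biased gyroscope alone would drift without bound, while the blended estimate stays close to the true tilt. Production systems typically track full 3D orientation and position and combine inertial data with camera-based tracking.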

Input methods

Mixed Reality devices support various input methods such as hand tracking, eye tracking, and speech input. As the technology advances, more natural interactions are becoming possible: users can interact with digital objects directly with their hands, or use multi-modal input that combines several input methods.
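As a rough illustration of multi-modal input, the sketch below pairs a spoken command with whatever object the user was looking at just before speaking. Everything here is hypothetical: the `GazeSample` and `SpeechCommand` types, the `resolve_command` helper, and the half-second window are assumptions chosen for the example, not part of any device SDK.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class GazeSample:
    timestamp: float        # seconds
    target: Optional[str]   # id of the object under the user's gaze, if any

@dataclass
class SpeechCommand:
    timestamp: float        # seconds
    verb: str               # recognized command word, e.g. "rotate"

def resolve_command(command: SpeechCommand,
                    gaze_history: List[GazeSample],
                    max_age: float = 0.5) -> Optional[Tuple[str, str]]:
    """Attach a spoken verb to the most recently gazed-at object.

    Only gaze samples that hit an object and fall within max_age seconds
    before the command are considered.
    """
    candidates = [
        g for g in gaze_history
        if g.target is not None
        and 0.0 <= command.timestamp - g.timestamp <= max_age
    ]
    if not candidates:
        return None
    latest = max(candidates, key=lambda g: g.timestamp)
    return (command.verb, latest.target)

gaze = [GazeSample(1.0, "cube"), GazeSample(1.2, "sphere"), GazeSample(1.4, None)]
result = resolve_command(SpeechCommand(1.5, "rotate"), gaze)
print(result)
```

In this example the user looked at the cube, then the sphere, then at empty space, and said "rotate" shortly afterwards, so the command is applied to the sphere.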

Spatial Mapping (also called Surface Reconstruction or Meshing)

With spatial mapping, the device constructs the 3D geometry of the surrounding environment. Using this data, Mixed Reality apps can interact with real-world surfaces and provide realistic experiences. For example, the app can place a digital object on top of a physical table, and digital content can be occluded by the physical environment.
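Placing an object on a real surface can be sketched as a ray cast against the reconstructed mesh. The example below is a simplified illustration, assuming the mesh is available as a plain list of triangles with y as the up axis; the helper names and the hand-written table and floor geometry are made up for the example. It uses the Möller–Trumbore ray-triangle intersection test.

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def ray_triangle(origin, direction, tri, eps=1e-9):
    """Moller-Trumbore test: distance along the ray to the triangle, or None."""
    v0, v1, v2 = tri
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return None                      # ray is parallel to the triangle
    inv = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv
    return t if t > eps else None        # ignore hits behind the ray origin

def place_on_surface(x, z, mesh, start_height=10.0):
    """Cast a ray straight down at (x, z) and return the first surface point hit."""
    origin = (x, start_height, z)
    down = (0.0, -1.0, 0.0)
    hits = []
    for tri in mesh:
        t = ray_triangle(origin, down, tri)
        if t is not None:
            hits.append(t)
    if not hits:
        return None
    return (x, start_height - min(hits), z)

# Hypothetical spatial mapping output: a 1 m x 1 m tabletop at 0.75 m above a floor.
table = [((0, 0.75, 0), (1, 0.75, 0), (1, 0.75, 1)),
         ((0, 0.75, 0), (1, 0.75, 1), (0, 0.75, 1))]
floor = [((-5, 0, -5), (5, 0, -5), (5, 0, 5)),
         ((-5, 0, -5), (5, 0, 5), (-5, 0, 5))]
mesh = table + floor

on_table = place_on_surface(0.5, 0.25, mesh)   # lands on the tabletop
on_floor = place_on_surface(3.0, 2.0, mesh)    # misses the table, lands on the floor
print(on_table, on_floor)
```

Occlusion follows a similar idea: if a real surface lies between the viewer and a digital object along the line of sight, the object is hidden for that part of the view. Real meshes contain many thousands of triangles, so engines usually rely on spatial acceleration structures instead of testing every triangle.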

Spatial Audio

In Mixed Reality, spatial audio can provide cues and make the experience more realistic. Just like real-life objects, spatialized audio gives the user a directional sense, and helps the user understand where the object is located in the 3D space.
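The directional cue can be approximated with a very simple stereo model. The sketch below assumes constant-power panning based on the horizontal angle to the source, plus inverse-distance attenuation; the `spatialize` function, its coordinate convention, and `ref_distance` are assumptions for illustration. Real spatial audio engines generally use head-related transfer functions (HRTFs), which also convey height and front/back differences that this model cannot.

```python
import math

def spatialize(listener_pos, listener_yaw, source_pos, ref_distance=1.0):
    """Return (left_gain, right_gain) for a sound source.

    Assumed convention: y is up, a yaw of 0 faces +z, and +x is to the
    listener's right. Height differences are ignored.
    """
    dx = source_pos[0] - listener_pos[0]
    dz = source_pos[2] - listener_pos[2]
    # Clamp so that sources closer than ref_distance are not amplified.
    distance = max(math.hypot(dx, dz), ref_distance)
    attenuation = ref_distance / distance

    # Horizontal angle of the source relative to the facing direction.
    azimuth = math.atan2(dx, dz) - listener_yaw
    pan = math.sin(azimuth)              # -1 = fully left, +1 = fully right

    # Constant-power panning: left^2 + right^2 stays constant as pan changes.
    angle = (pan + 1.0) * math.pi / 4.0
    return attenuation * math.cos(angle), attenuation * math.sin(angle)

listener = (0.0, 1.6, 0.0)
ahead = spatialize(listener, 0.0, (0.0, 1.6, 2.0))   # source straight ahead
right = spatialize(listener, 0.0, (2.0, 1.6, 0.0))   # source to the right
print(ahead, right)
```

A source straight ahead produces equal gains in both ears, while a source to the right is heard almost entirely in the right channel, and both get quieter with distance.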

Applications – Where are they being used?

Education

Case Western Reserve University uses Mixed Reality for medical education. Multiple students can join and view 3D holographic anatomy models regardless of their location. During the COVID-19 pandemic, students used Microsoft HoloLens with the HoloAnatomy software to join classes remotely.

Architectural Design and construction site BIM data visualization

Trimble uses Mixed Reality to let users visualize and interact with 3D data onsite, from any angle and at true-to-life scale. A shared 3D model in context makes it easy to design collectively and communicate ideas. Onsite construction visualization empowers field workers to make informed decisions.

Automotive

Ford uses Mixed Reality in its car design process. As a shared experience, multiple users can view design explorations as an overlay on top of the physical model and leave voice annotations for other reviewers.

Aerospace

NASA used Mixed Reality to design the Mars Perseverance rover. Being able to see the 3D models at 1:1 scale and look inside the components helped the team identify challenges and iterate on the design.

Airbus uses Mixed Reality to accelerate the design and manufacture of aircraft.

Medical

Mixed Reality is being actively adopted in the medical field. Medical data such as CT scans can be overlaid on the patient during surgery, and multiple virtual screens can float around the doctor for easier access to information.

Remote Assist

At Thyssenkrupp, Mixed Reality lets 24,000 elevator service technicians visualize and identify problems ahead of a job, and gives them remote, hands-free access to technical and expert information onsite without needing to open a laptop or physical documents. Using the front-facing camera of HoloLens, video of the site can be streamed in real time for easier communication.