Simultaneous Localization and Mapping (SLAM) is a technology that allows a device, such as a robot or a self-driving car, to create a map of its environment while simultaneously determining its own location within that map. This enables the device to navigate and understand its surroundings in real time.

To perform SLAM, the device typically combines sensors such as cameras, lidar (laser scanners), or radar to gather data about the environment. Algorithms then process this data to build the map and to estimate the device's location within it. Both the map and the location estimate are updated continuously as the device moves through the environment.

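SLAM is challenging because its two tasks are interdependent. Localization means estimating the device's position and orientation, and mapping means building a map of the environment. An accurate location estimate requires a good map, and building a good map requires knowing where the device is. The device must also cope with uncertainty, because sensor data is incomplete and noisy. SLAM algorithms therefore typically treat both the map and the device's location as probabilistic estimates, which they refine as new measurements arrive.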
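To make this coupling concrete, here is a minimal sketch that assumes a robot moving along a single line and one stationary landmark. In this toy setup both the motion and the range measurement are linear, so the estimator is an ordinary Kalman filter, which is the linear special case of EKF-SLAM. The robot's position and the landmark's position share one joint state. The off-diagonal covariance term records how an error in one estimate is tied to an error in the other. The function names, noise values, and positions are illustrative assumptions.

```python
def predict(state, P, u, q):
    """Motion step: the robot moves by u; only its position uncertainty grows."""
    x, m = state
    new_P = [[P[0][0] + q, P[0][1]],
             [P[1][0], P[1][1]]]
    return [x + u, m], new_P

def update(state, P, z, r):
    """Measurement step: z is a noisy range to the landmark, modelled as m - x."""
    x, m = state
    H = (-1.0, 1.0)                        # derivative of (m - x) w.r.t. (x, m)
    y = z - (m - x)                        # innovation: measured minus predicted range
    PHt = [P[0][0] * H[0] + P[0][1] * H[1],
           P[1][0] * H[0] + P[1][1] * H[1]]
    S = H[0] * PHt[0] + H[1] * PHt[1] + r  # innovation variance
    K = [PHt[0] / S, PHt[1] / S]           # Kalman gain for robot and landmark
    new_state = [x + K[0] * y, m + K[1] * y]
    # P <- (I - K H) P, using H P = (P H^T)^T because P is symmetric
    new_P = [[P[i][j] - K[i] * PHt[j] for j in range(2)] for i in range(2)]
    return new_state, new_P

true_landmark, true_x = 10.0, 0.0
state = [0.0, 0.0]                  # [robot position, landmark position]
P = [[0.0, 0.0], [0.0, 1000.0]]     # start pose known; landmark essentially unknown
state, P = update(state, P, true_landmark - true_x, r=0.25)
for _ in range(5):
    true_x += 1.0
    state, P = predict(state, P, u=1.0, q=0.1)
    # Simulated measurements are exact here so the example stays deterministic
    state, P = update(state, P, true_landmark - true_x, r=0.25)
print("estimate:", state)
print("covariance:", P)
```

The landmark estimate converges toward 10 and the robot estimate follows its motion. The positive off-diagonal covariance shows that the two estimates are correlated, so a correction to one also shifts the other.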

How is SLAM used for Mixed Reality?

SLAM enables mixed reality (MR) experiences by letting a device, such as a smartphone or a headset, understand the layout of the physical space and place virtual objects accurately within it. The device uses SLAM algorithms to map the physical environment and to track its own position and orientation within that map. It can then draw virtual content where it belongs from the user's current viewpoint.
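Below is a minimal sketch of the placement step. It assumes that the SLAM system already reports the camera's position and a yaw-only orientation in the map frame; a real tracker reports a full 3D rotation. The virtual object is stored at fixed map coordinates. Each frame, the code transforms it into the camera frame and projects it with a pinhole model. The conventions (y up, camera looking along +z), the intrinsics `fx`, `fy`, `cx`, `cy`, and the function names are assumptions chosen for illustration.

```python
import math

def world_to_camera(p_world, cam_pos, yaw):
    """Express a map-frame point in the camera frame.

    Assumes a yaw-only camera orientation (rotation about the map's y/up axis):
    R maps camera axes to map axes, so p_cam = R^T (p_world - cam_pos).
    """
    c, s = math.cos(yaw), math.sin(yaw)
    dx, dy, dz = (p_world[i] - cam_pos[i] for i in range(3))
    # R = [[c, 0, s], [0, 1, 0], [-s, 0, c]]; apply R^T to (dx, dy, dz)
    return (c * dx - s * dz, dy, s * dx + c * dz)

def project(p_cam, fx=500.0, fy=500.0, cx=320.0, cy=240.0):
    """Pinhole projection; returns pixel (u, v), or None if behind the camera."""
    x, y, z = p_cam
    if z <= 0:
        return None
    # Image v grows downward while camera y points up, hence the minus sign
    return (cx + fx * x / z, cy - fy * y / z)

cup = (0.0, 0.75, 2.0)  # hypothetical virtual cup anchored 2 m ahead, 0.75 m high

# Frame 1: user stands at the origin, eyes at 1.6 m, looking along +z
uv1 = project(world_to_camera(cup, (0.0, 1.6, 0.0), yaw=0.0))
# Frame 2: user stepped 1 m to the right and turned to face the cup
yaw2 = math.atan2(cup[0] - 1.0, cup[2] - 0.0)
uv2 = project(world_to_camera(cup, (1.0, 1.6, 0.0), yaw2))
print(uv1, uv2)
```

The cup's map coordinates never change, so moving or turning the camera only changes where it is drawn on screen. This is what keeps the cup fixed in place from the user's point of view.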

For example, a user wearing an MR headset might see virtual objects resting on a real table in their living room. The headset uses SLAM to map the room, including the table's surface, and to track its own position and orientation, so it can render the virtual objects on the tabletop. The objects are anchored to the map rather than to the headset. The user can therefore walk around the table and interact with them as if they were real, and the objects stay in place as long as the mapping and tracking remain accurate.
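To locate the tabletop, the device usually searches the 3D points in its SLAM map for a flat surface. The sketch below uses a simplified RANSAC that searches only for horizontal planes. It assumes that the map's y axis points up; IMU gravity estimates commonly make this alignment possible, but here it is simply assumed. The algorithm repeatedly picks a random point and takes its height as a candidate plane. It keeps the candidate supported by the most points, then anchors a virtual object at the centre of that supporting set. The point data and the function name are hypothetical.

```python
import random

def fit_horizontal_plane(points, tol=0.01, iterations=50, seed=0):
    """RANSAC restricted to horizontal planes y = h (y assumed to point up)."""
    rng = random.Random(seed)
    best_inliers = []
    for _ in range(iterations):
        h = rng.choice(points)[1]  # hypothesis: a plane at this point's height
        inliers = [p for p in points if abs(p[1] - h) < tol]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    # Refine the height as the mean of the consensus set
    height = sum(p[1] for p in best_inliers) / len(best_inliers)
    return height, best_inliers

# Hypothetical map points: a tabletop near y = 0.75 m, some floor points, some clutter
table = [(0.1 * (i % 5) - 0.2, 0.75 + 0.002 * ((i % 5) - 2), 1.8 + 0.1 * (i // 5))
         for i in range(20)]
floor = [(0.5 * i - 2.0, 0.0, 1.0 + 0.3 * i) for i in range(8)]
clutter = [(0.3, 0.30, 2.5), (-0.6, 0.50, 1.2), (1.0, 1.10, 3.0),
           (0.2, 1.40, 2.2), (-1.0, 0.90, 1.6)]
points = table + floor + clutter

height, support = fit_horizontal_plane(points)
# Anchor the virtual object at the centre of the detected surface, resting on it
anchor = (sum(p[0] for p in support) / len(support),
          height,
          sum(p[2] for p in support) / len(support))
print(f"table height {height:.3f} m, {len(support)} supporting points, anchor {anchor}")
```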

SLAM is a key technology for mixed reality because it lets devices keep virtual content locked to the physical world. Digital objects can then sit on real surfaces and fit the actual layout of a room, instead of floating in front of the user. SLAM has the potential to change how we interact with computers and digital content, and to create new opportunities in education, entertainment, and communication.

How do Mixed Reality devices achieve SLAM?

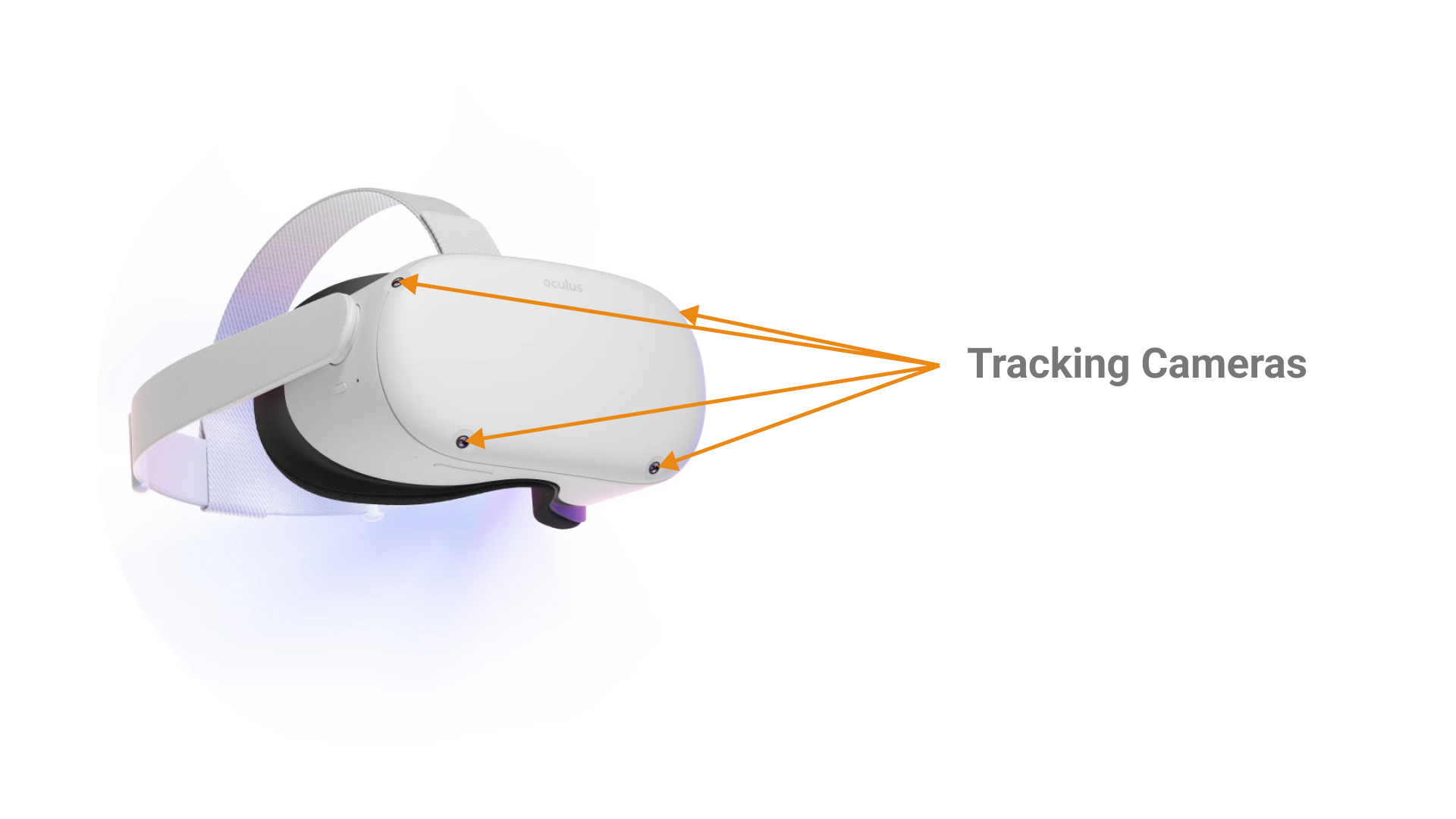

Mixed reality (MR) devices, such as MR headsets and smartphones, perform SLAM in much the same way as virtual reality (VR) headsets that use inside-out tracking. They typically have one or more cameras that observe the user's surroundings. They also have inertial measurement units (IMUs), which measure angular velocity and linear acceleration. The device can estimate changes in its orientation and position from these IMU measurements.
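IMUs report rates and accelerations rather than orientation and position, so the device must integrate their readings. The sketch below shows why IMU-only tracking drifts. It assumes a stationary device, one rotation axis, one translation axis, and an accelerometer reading from which gravity has already been removed. A constant gyro bias produces an orientation error that grows linearly with time. A constant accelerometer bias produces a position error that grows quadratically. The bias values are illustrative and are not measured from any real device.

```python
def dead_reckon(gyro_rates, accels, dt):
    """Integrate IMU samples along one rotation and one translation axis.

    Assumes gravity has already been removed from the accelerometer readings.
    """
    yaw = vel = pos = 0.0
    for omega, a in zip(gyro_rates, accels):
        yaw += omega * dt  # orientation: one integration of angular rate
        vel += a * dt      # velocity: first integration of acceleration
        pos += vel * dt    # position: second integration
    return yaw, pos

dt, n = 0.01, 1000   # 10 s of samples at 100 Hz
gyro = [0.01] * n    # device is still; gyro reads a 0.01 rad/s bias
accel = [0.05] * n   # accelerometer reads a 0.05 m/s^2 bias
yaw_err, pos_err = dead_reckon(gyro, accel, dt)
print(f"heading error {yaw_err:.3f} rad, position error {pos_err:.2f} m")
```

With these assumed values, 10 seconds of integration leaves about 0.1 rad of heading error and about 2.5 m of position error, even though the device never moved. This is why the device also needs camera data to anchor the estimate.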

To build the map and estimate its pose (position and orientation) within it, the MR device processes the camera and IMU data together. This typically involves three steps. The device first detects distinctive visual features in each camera frame. It then matches them against features seen in earlier frames or already stored in the map. Finally, it applies pose estimation to those matches to compute its position and orientation.
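The matching and pose-estimation steps can be sketched in 2D as a simplification of the 3D problem that real devices solve. Observed features are matched to map landmarks by nearest-neighbour descriptor distance. A ratio test, a common heuristic, rejects ambiguous matches. The device pose is then recovered as the rotation and translation that best align the matched points in the least-squares sense. The descriptors, landmark positions, true pose, and function names below are made-up illustrations.

```python
import math

def match_features(observed, mapped, ratio=0.8):
    """Nearest-neighbour descriptor matching with a ratio test.

    Both lists hold (point, descriptor) pairs; returns (map_point, observed_point)
    pairs whose best match is clearly better than the second-best one.
    """
    pairs = []
    for q, dq in observed:
        ranked = sorted((math.dist(dq, dm), p) for p, dm in mapped)
        (best_d, best_p), (second_d, _) = ranked[0], ranked[1]
        if best_d < ratio * second_d:
            pairs.append((best_p, q))
    return pairs

def estimate_pose_2d(pairs):
    """Least-squares rotation theta and translation t with map_pt ~ R(theta) obs_pt + t."""
    n = len(pairs)
    pcx = sum(p[0] for p, _ in pairs) / n
    pcy = sum(p[1] for p, _ in pairs) / n
    qcx = sum(q[0] for _, q in pairs) / n
    qcy = sum(q[1] for _, q in pairs) / n
    cos_sum = sin_sum = 0.0
    for (px, py), (qx, qy) in pairs:
        px, py, qx, qy = px - pcx, py - pcy, qx - qcx, qy - qcy  # centre both sets
        cos_sum += px * qx + py * qy
        sin_sum += qx * py - qy * px
    theta = math.atan2(sin_sum, cos_sum)
    c, s = math.cos(theta), math.sin(theta)
    return theta, pcx - (c * qcx - s * qcy), pcy - (s * qcx + c * qcy)

# Hypothetical map: landmark positions with 4-element descriptors
landmarks = [((1.0, 2.0), (1, 0, 0, 0)), ((4.0, 1.0), (0, 1, 0, 0)),
             ((3.0, 5.0), (0, 0, 1, 0)), ((0.0, 4.0), (0, 0, 0, 1))]
true_theta, true_t = math.radians(30.0), (2.0, 1.0)

def to_device_frame(p):
    """Inverse of map_pt = R obs_pt + t, i.e. obs_pt = R^T (map_pt - t)."""
    c, s = math.cos(true_theta), math.sin(true_theta)
    dx, dy = p[0] - true_t[0], p[1] - true_t[1]
    return (c * dx + s * dy, -s * dx + c * dy)

# What the device "sees": landmarks in its own frame, with perturbed descriptors
observed = [(to_device_frame(p), tuple(v + 0.05 for v in d)) for p, d in landmarks]
pairs = match_features(observed, landmarks)
theta, tx, ty = estimate_pose_2d(pairs)
print(f"{len(pairs)} matches, heading {math.degrees(theta):.1f} deg, "
      f"position ({tx:.2f}, {ty:.2f})")
```

A real system usually estimates pose from 2D image features against 3D map points, which is the Perspective-n-Point problem. It also wraps the estimate in RANSAC so that wrong matches do not corrupt the result. The closed-form 2D alignment here only illustrates the core idea.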

The device must also correct the errors and drift that accumulate in its position and orientation estimates, so that the map and its location stay accurate over time. Common approaches include fusing visual and inertial measurements and recognizing previously visited places (loop closure). Accuracy matters for placing virtual objects, because any mismatch between the real and virtual environments breaks the illusion of the MR experience. For example, a virtual object might slowly slide across a real table.
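Production MR systems typically fuse camera and IMU data with an extended Kalman filter or with nonlinear optimization. The sketch below uses a much simpler stand-in: a complementary filter on heading only. The integrated gyro provides smooth short-term changes, while a noisy but unbiased heading from the camera keeps pulling the estimate back, so the gyro bias can no longer accumulate. The `alpha` value, the bias, and the camera noise pattern are assumptions chosen for illustration.

```python
def complementary_filter(gyro_rates, visual_yaws, dt, alpha=0.98):
    """Blend integrated gyro yaw (smooth, drifts) with visual yaw (noisy, drift-free)."""
    yaw = visual_yaws[0]
    out = []
    for omega, yaw_vis in zip(gyro_rates, visual_yaws):
        # Mostly trust the gyro step, but nudge toward the camera's heading each sample
        yaw = alpha * (yaw + omega * dt) + (1.0 - alpha) * yaw_vis
        out.append(yaw)
    return out

dt, n = 0.01, 6000                                      # 60 s at 100 Hz
gyro = [0.01] * n                                       # still device, 0.01 rad/s gyro bias
visual = [0.02 if k % 2 else -0.02 for k in range(n)]  # hypothetical noisy camera yaw

gyro_only = 0.0
for omega in gyro:
    gyro_only += omega * dt                             # pure integration, for comparison
fused = complementary_filter(gyro, visual, dt)
print(f"gyro only: {gyro_only:.3f} rad, fused: {fused[-1]:.4f} rad")
```

With these assumed numbers, the gyro-only heading drifts to about 0.6 rad after 60 seconds. Over the same period, the fused heading stays within a few hundredths of a radian of the truth.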

Beyond building a map, MR devices use SLAM to track the user's movement continuously; for a headset, this means the movement of the user's head. The device re-renders each virtual object from every new viewpoint so that the object stays fixed in the physical space. This lets the user interact with virtual elements as if they were physically present in the real world, which strengthens the sense of immersion in the MR experience.